Multimodality API

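"All things that are naturally connected ought to be taught in combination" - John Amos Comenius, "Orbis Sensualium Pictus", 1658

Humans process knowledge simultaneously across multiple modes of data input. The way we learn and the experiences we have are all multimodal. We do not rely on vision alone, audio alone, or text alone.

Contrary to these principles, machine learning has often focused on specialized models tailored to a single modality. For instance, we developed audio models for tasks such as text-to-speech or speech-to-text, and computer vision models for tasks such as object detection and classification.

However, a new wave of multimodal large language models is emerging. Examples include OpenAI's GPT-4o, Google's Vertex AI Gemini 1.5, Anthropic's Claude3, and the open source offerings Llama3.2, LLaVA and BakLLaVA. These models can accept multiple inputs, including text, images, audio and video, and generate text responses by integrating them.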

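Note: The multimodal large language model (LLM) features enable a model to process and generate text in conjunction with other modalities such as images, audio, or video.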

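Spring AI Multimodality

Multimodality refers to a model's ability to simultaneously understand and process information from various sources, including text, images, audio, and other data formats.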

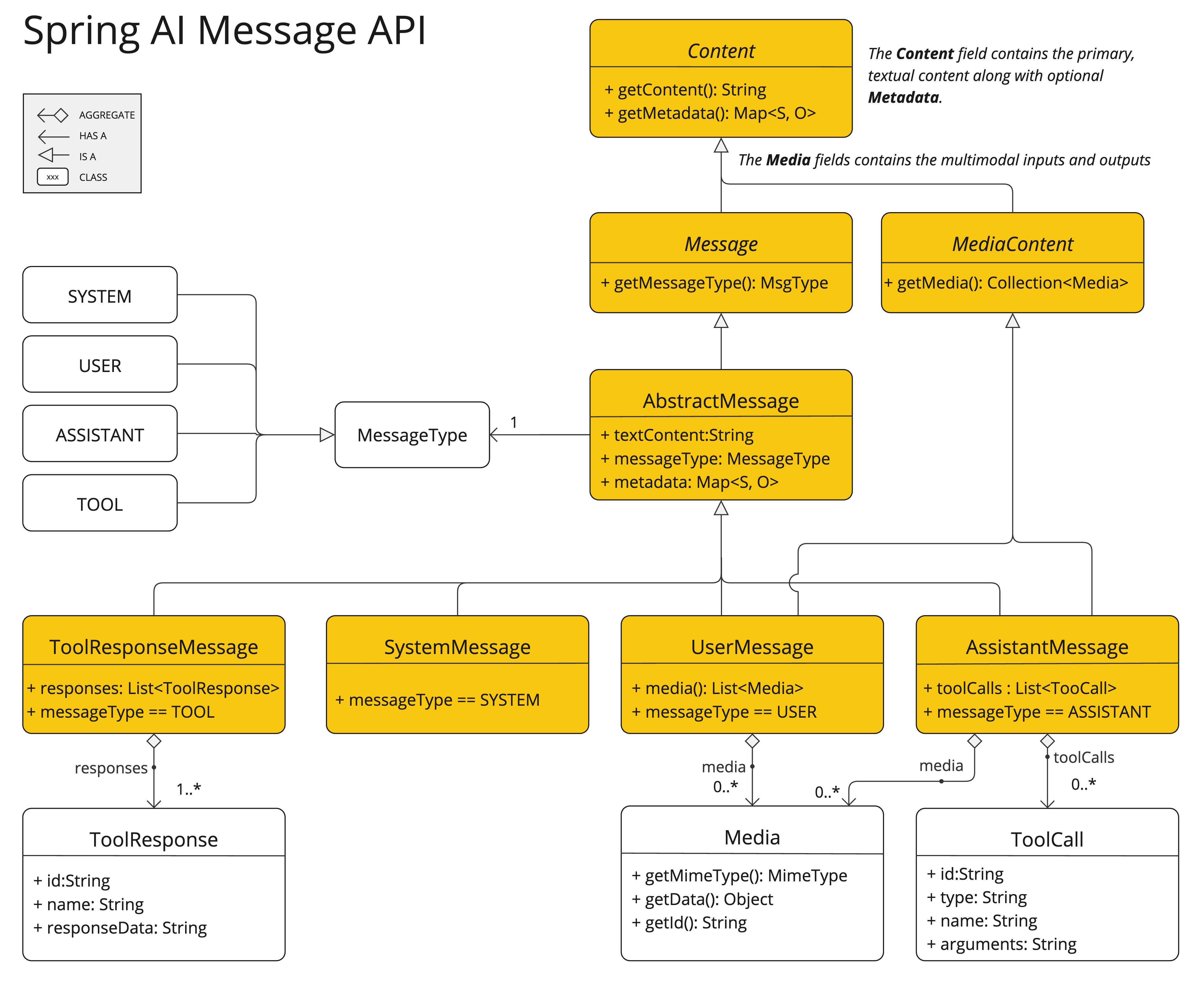

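The Spring AI Message API provides all the abstractions needed to support multimodal LLMs.

The UserMessage's content field is used primarily for text input, while the optional media field allows adding one or more pieces of additional content in other modalities, such as images, audio, and video.

The MimeType specifies the modality type.

Depending on the LLM used, the Media data field can be either the raw media content as a Resource object or a URI pointing to the content.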
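To make the shape of such a message concrete, here is a minimal, self-contained sketch of the idea: text plus a list of media items, each carrying a MIME type and either inline bytes or a URI. The classes `MultimodalMessageSketch`, `MediaItem` and `UserInput`, and the `example.com` URL, are hypothetical stand-ins invented for this illustration. They are not the Spring AI `UserMessage` or `Media` classes and do not mirror their signatures.

```java
import java.net.URI;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Hypothetical stand-ins for illustration only; NOT the Spring AI classes.
class MultimodalMessageSketch {

    /** One piece of non-text content: a MIME type plus either raw bytes or a URI. */
    static final class MediaItem {
        final String mimeType; // e.g. "image/png"
        final byte[] rawData;  // non-null when the content is sent inline
        final URI location;    // non-null when the content is referenced by URI

        private MediaItem(String mimeType, byte[] rawData, URI location) {
            if (mimeType == null || mimeType.indexOf('/') <= 0) {
                throw new IllegalArgumentException("Not a MIME type: " + mimeType);
            }
            this.mimeType = mimeType;
            this.rawData = rawData;
            this.location = location;
        }

        static MediaItem ofBytes(String mimeType, byte[] data) {
            return new MediaItem(mimeType, data.clone(), null);
        }

        static MediaItem ofUri(String mimeType, URI uri) {
            return new MediaItem(mimeType, null, uri);
        }

        /** The top-level MIME type ("image", "audio", "video") names the modality. */
        String modality() {
            return mimeType.substring(0, mimeType.indexOf('/')).toLowerCase(Locale.ROOT);
        }

        boolean isInline() {
            return rawData != null;
        }
    }

    /** A user message: text content plus zero or more media items. */
    static final class UserInput {
        final String text;
        final List<MediaItem> media;

        UserInput(String text, MediaItem... media) {
            this.text = text;
            this.media = Collections.unmodifiableList(Arrays.asList(media.clone()));
        }
    }

    public static void main(String[] args) {
        UserInput message = new UserInput(
                "Explain what do you see in this picture?",
                // Inline content: only the first bytes of a PNG header, as a placeholder.
                MediaItem.ofBytes("image/png", new byte[] {(byte) 0x89, 'P', 'N', 'G'}),
                // Referenced content: a made-up URL.
                MediaItem.ofUri("audio/wav", URI.create("https://example.com/clip.wav")));

        for (MediaItem item : message.media) {
            System.out.println(item.modality() + " inline=" + item.isInline());
        }
    }
}
```

The sketch keeps the modality on each media item rather than on the message, so a single message can mix several modalities.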

Note: The media field currently applies only to user input messages.

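For example, we can take the following picture (multimodal.test.png) as input and ask the LLM to explain what it sees.

For most multimodal LLMs, the Spring AI code would look something like this: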

```java
// Load the image from the classpath.
var imageResource = new ClassPathResource("/multimodal.test.png");

// Text goes in the message content; the image is attached as media.
var userMessage = new UserMessage(
        "Explain what do you see in this picture?", // content
        new Media(MimeTypeUtils.IMAGE_PNG, imageResource)); // media

ChatResponse response = chatModel.call(new Prompt(userMessage));
```

Or, using the fluent ChatClient API:

```java
String response = ChatClient.create(chatModel).prompt()
        .user(u -> u.text("Explain what do you see on this picture?")
                .media(MimeTypeUtils.IMAGE_PNG, new ClassPathResource("/multimodal.test.png")))
        .call()
        .content();
```

Either form produces a response like:

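This is an image of a fruit bowl with a simple design. The bowl is made of metal with curved wire edges that create an open structure, allowing the fruit to be visible from all angles. Inside the bowl, there are two yellow bananas resting on top of what appears to be a red apple. The bananas are slightly overripe, as indicated by the brown spots on their peels. The bowl has a metal ring at the top, likely to serve as a handle for carrying. The bowl is placed on a flat surface with a neutral-colored background that provides a clear view of the fruit inside.

Spring AI provides multimodal support for the following chat models: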