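OpenAI Chat

Spring AI supports the various AI language models from OpenAI, the company behind ChatGPT, which has been instrumental in sparking interest in AI-driven text generation thanks to its industry-leading text generation models and embeddings.

Prerequisites

You need to create an API key with OpenAI to access ChatGPT models.

Create an account at the OpenAI signup page and generate a token on the API Keys page.

The Spring AI project defines a configuration property named spring.ai.openai.api-key, which you should set to the value of the API key obtained from openai.com.

You can set this configuration property in your application.properties file:

    spring.ai.openai.api-key=<your-openai-api-key>

For enhanced security when handling sensitive information such as API keys, you can reference a custom environment variable through a Spring property placeholder: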

    # In application.yml
    spring:
      ai:
        openai:
          api-key: ${OPENAI_API_KEY}

    # In your environment or .env file
    export OPENAI_API_KEY=<your-openai-api-key>

You can also set this configuration programmatically in your application code:

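    // Retrieve API key from a secure source or environment variable
    String apiKey = System.getenv("OPENAI_API_KEY");

Auto-configuration

Note: The artifact names of the Spring AI auto-configuration and starter modules have changed significantly. See the upgrade notes for more information.

Spring AI provides Spring Boot auto-configuration for the OpenAI chat client.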

To enable it, add the following dependency to your project's Maven pom.xml or Gradle build.gradle build file:

Maven:

    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-starter-model-openai</artifactId>
    </dependency>

Gradle:

    dependencies {
        implementation 'org.springframework.ai:spring-ai-starter-model-openai'
    }

Note: See the Dependency Management section to add the Spring AI BOM to your build file.

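Chat Properties

Retry Properties

The prefix spring.ai.retry is the property prefix that lets you configure the retry mechanism for the OpenAI chat model.

| Property | Description | Default |
|---|---|---|
| spring.ai.retry.max-attempts | Maximum number of retry attempts. | 10 |
| spring.ai.retry.backoff.initial-interval | Initial sleep duration for the exponential backoff policy. | 2 sec. |
| spring.ai.retry.backoff.multiplier | Backoff interval multiplier. | 5 |
| spring.ai.retry.backoff.max-interval | Maximum backoff duration. | 3 min. |
| spring.ai.retry.on-client-errors | If false, a NonTransientAiException is thrown on client errors and no retry is attempted. | false |
| spring.ai.retry.exclude-on-http-codes | List of HTTP status codes that should not trigger a retry (for example, by throwing a NonTransientAiException). | empty |
| spring.ai.retry.on-http-codes | List of HTTP status codes that should trigger a retry (for example, by throwing a TransientAiException). | empty |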
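As a rough illustration of how the default backoff values in the table interact, the following standalone Java sketch computes the wait before each retry under a plain exponential backoff (initial interval 2 seconds, multiplier 5, capped at 3 minutes). The class and method names are hypothetical and are not part of Spring AI; the framework performs the actual retries, and its exact timing may differ from this simplified model.

```java
import java.time.Duration;

// Hypothetical helper, not a Spring AI class: mirrors the default values of
// spring.ai.retry.backoff.* to show how the wait time grows between retries.
final class BackoffSketch {

    static final Duration INITIAL_INTERVAL = Duration.ofSeconds(2);
    static final int MULTIPLIER = 5;
    static final Duration MAX_INTERVAL = Duration.ofMinutes(3);

    // Wait before the given retry (1-based): the initial interval multiplied
    // once per earlier retry, never exceeding the maximum interval.
    static Duration delayBeforeRetry(int retry) {
        long millis = INITIAL_INTERVAL.toMillis();
        for (int i = 1; i < retry; i++) {
            millis = Math.min(millis * MULTIPLIER, MAX_INTERVAL.toMillis());
        }
        return Duration.ofMillis(millis);
    }

    public static void main(String[] args) {
        for (int retry = 1; retry <= 5; retry++) {
            System.out.println("retry " + retry + ": wait "
                    + delayBeforeRetry(retry).toSeconds() + " s");
        }
    }
}
```

Under these assumptions the waits are 2 s, 10 s, 50 s, and then 180 s for every later retry, because the fourth value (250 s) already exceeds the 3 minute cap.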

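Connection Properties

The prefix spring.ai.openai is the property prefix that lets you connect to OpenAI.

| Property | Description | Default |
|---|---|---|
| spring.ai.openai.base-url | The URL to connect to | https://api.openai.com |
| spring.ai.openai.api-key | The API key | - |
| spring.ai.openai.organization-id | Optionally, you can specify which organization is used for an API request. | - |
| spring.ai.openai.project-id | Optionally, you can specify which project is used for an API request. | - |

Note: For users who belong to multiple organizations (or who access their projects through their legacy user API key), you can optionally specify which organization and project are used for an API request. Usage from these API requests counts as usage for the specified organization and project.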
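To make the connection properties more tangible, here is a minimal sketch of where such values typically end up in a raw HTTP request to OpenAI. It uses only the JDK's java.net.http API and builds the request without sending it. The class name, the method, and the placeholder values are hypothetical; the header names OpenAI-Organization and OpenAI-Project and the /v1/chat/completions path follow OpenAI's public API conventions and are assumptions as far as this document is concerned. Spring AI assembles its own requests internally, so treat this as an illustration only.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch, not Spring AI code: builds (but does not send) a raw
// chat completion request to show where the connection properties end up.
final class ConnectionSketch {

    static HttpRequest buildRequest(String baseUrl, String apiKey,
                                    String organizationId, String projectId,
                                    String jsonBody) {
        HttpRequest.Builder builder = HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/v1/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody));
        // Organization and project are optional, so add them only when present.
        if (organizationId != null) {
            builder.header("OpenAI-Organization", organizationId);
        }
        if (projectId != null) {
            builder.header("OpenAI-Project", projectId);
        }
        return builder.build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildRequest("https://api.openai.com",
                "sk-placeholder", "org-placeholder", null, "{}");
        System.out.println(request.uri());
        System.out.println(request.headers().map().keySet());
    }
}
```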

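Configuration Properties

Note: Enabling and disabling of the chat auto-configuration is now configured through properties with the prefix

The prefix spring.ai.openai.chat is the property prefix that lets you configure the chat model implementation for OpenAI.

| Property | Description | Default |
|---|---|---|
| spring.ai.openai.chat.enabled (removed and no longer valid) | Enable the OpenAI chat model. | true |
| spring.ai.model.chat | Enable the OpenAI chat model. | openai |
| spring.ai.openai.chat.base-url | | - |
| spring.ai.openai.chat.completions-path | The path to append to the base URL. | |
| spring.ai.openai.chat.api-key | | - |
| spring.ai.openai.chat.organization-id | Optionally, you can specify which organization is used for an API request. | - |
| spring.ai.openai.chat.project-id | Optionally, you can specify which project is used for an API request. | - |
| spring.ai.openai.chat.options.model | Name of the OpenAI chat model to use. Models you can choose from include: | |
| spring.ai.openai.chat.options.temperature | The sampling temperature that controls the apparent creativity of generated completions. Higher values make the output more random, while lower values make the results more focused and deterministic. For the same completion request, it is not recommended to modify | 0.8 |
| spring.ai.openai.chat.options.frequencyPenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood of repeating the same line. | 0.0f |
| spring.ai.openai.chat.options.logitBias | Modify the likelihood of specified tokens appearing in the completion. | - |
| spring.ai.openai.chat.options.maxTokens | The maximum number of tokens to generate in the chat completion. The total length of input tokens and generated tokens is limited by the model's context length. Use for non-reasoning models (e.g., gpt-4o, gpt-3.5-turbo). Cannot be used with reasoning models (e.g., o1, o3, o4-mini series). Mutually exclusive with maxCompletionTokens: setting both results in an API error. | - |
| spring.ai.openai.chat.options.maxCompletionTokens | An upper bound on the number of tokens that can be generated for a completion, including visible output tokens and reasoning tokens. Required for reasoning models (e.g., o1, o3, o4-mini series). Cannot be used with non-reasoning models (e.g., gpt-4o, gpt-3.5-turbo). Mutually exclusive with maxTokens: setting both results in an API error. | - |
| spring.ai.openai.chat.options.n | How many chat completion choices to generate for each input message. Note that you are charged based on the number of tokens generated across all choices. Set | 1 |
| spring.ai.openai.chat.options.store | Whether to store the output of this chat completion request for use in our models | false |
| spring.ai.openai.chat.options.metadata | Developer-defined tags and values used for filtering completions in the chat completions dashboard | empty map |
| spring.ai.openai.chat.options.output-modalities | Output types that you would like the model to generate for this request. Most models are capable of generating text, which is the default. | - |
| spring.ai.openai.chat.options.output-audio | Audio parameters for the audio generation. When requesting | - |
| spring.ai.openai.chat.options.presencePenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood of talking about new topics. | - |
| spring.ai.openai.chat.options.responseFormat.type | Compatible with | - |
| spring.ai.openai.chat.options.responseFormat.name | Response format schema name. Applicable only for | custom_schema |
| spring.ai.openai.chat.options.responseFormat.schema | Response format JSON schema. Applicable only for | - |
| spring.ai.openai.chat.options.responseFormat.strict | Response format JSON schema adherence strictness. Applicable only for | - |
| spring.ai.openai.chat.options.seed | This feature is in Beta. If specified, our system will make a best effort to sample deterministically, such that repeated requests with the same seed and parameters should return the same result. | - |
| spring.ai.openai.chat.options.stop | Up to 4 sequences where the API will stop generating further tokens. | - |
| spring.ai.openai.chat.options.topP | An alternative to sampling with temperature, called nucleus sampling, where the model considers the tokens with | - |
| spring.ai.openai.chat.options.tools | A list of tools the model may call. Currently, only functions are supported as a tool. Use this to provide a list of functions the model may generate JSON inputs for. | - |
| spring.ai.openai.chat.options.toolChoice | Controls which (if any) function is called by the model. | - |
| spring.ai.openai.chat.options.user | A unique identifier representing your end user, which can help OpenAI monitor and detect abuse. | - |
| spring.ai.openai.chat.options.functions | List of functions, identified by their names, to enable for function calling in a single prompt request. Functions with those names must exist in the | - |
| spring.ai.openai.chat.options.stream-usage | (For streaming only) Set to add an additional chunk with token usage statistics for the entire request. This chunk's | false |
| spring.ai.openai.chat.options.parallel-tool-calls | Whether to enable parallel function calling during tool use. | true |
| spring.ai.openai.chat.options.http-headers | Optional HTTP headers to be added to the chat completion request. To override the | - |
| spring.ai.openai.chat.options.proxy-tool-calls | If true, Spring AI does not handle function calls internally but proxies them to the client. The client is then responsible for handling the function calls, dispatching them to the appropriate function, and returning the results. If false (the default), Spring AI handles function calls internally. Applicable only for chat models with function calling support | false |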

Note: When using

Note: You can, for

Note: All properties prefixed with

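Token Limit Parameters: Model-Specific Usage

OpenAI provides two mutually exclusive parameters to control token generation limits:

| Parameter | Use Case | Compatible Models |
|---|---|---|
| maxTokens | Non-reasoning models | gpt-4o, gpt-4o-mini, gpt-4-turbo, gpt-3.5-turbo |
| maxCompletionTokens | Reasoning models | o1, o1-mini, o1-preview, o3, o4-mini series |

These parameters are *mutually exclusive*. Setting both results in an OpenAI API error.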
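If the model name is only known at runtime, the choice between the two parameters can be centralized in one place. The sketch below is a hypothetical helper, not part of Spring AI. It assumes that reasoning models can be recognized by the name prefixes o1, o3, and o4 taken from the table above, which is a simplification that may not hold for other or future model names.

```java
import java.util.List;

// Hypothetical helper, not part of Spring AI: picks which token-limit
// parameter applies, using the model families listed in the table above.
final class TokenLimitSketch {

    enum LimitParameter { MAX_TOKENS, MAX_COMPLETION_TOKENS }

    // Assumption: reasoning models are recognized by these name prefixes only.
    private static final List<String> REASONING_PREFIXES = List.of("o1", "o3", "o4");

    static LimitParameter parameterFor(String model) {
        for (String prefix : REASONING_PREFIXES) {
            if (model.startsWith(prefix)) {
                return LimitParameter.MAX_COMPLETION_TOKENS;
            }
        }
        return LimitParameter.MAX_TOKENS;
    }

    public static void main(String[] args) {
        System.out.println("gpt-4o -> " + parameterFor("gpt-4o"));
        System.out.println("o1-preview -> " + parameterFor("o1-preview"));
    }
}
```

A caller could then set exactly one of the two limits based on the returned value, which avoids sending both parameters in the same request.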

Usage Examples

For non-reasoning models (gpt-4o, gpt-3.5-turbo):

    ChatResponse response = chatModel.call(
        new Prompt(
            "Explain quantum computing in simple terms.",
            OpenAiChatOptions.builder()
                .model("gpt-4o")
                .maxTokens(150) // Use maxTokens for non-reasoning models
                .build()
        ));

For reasoning models (o1, o3 series):

    ChatResponse response = chatModel.call(
        new Prompt(
            "Solve this complex math problem step by step: ...",
            OpenAiChatOptions.builder()
                .model("o1-preview")
                .maxCompletionTokens(1000) // Use maxCompletionTokens for reasoning models
                .build()
        ));

Builder pattern validation: The OpenAI ChatOptions builder automatically enforces mutual exclusivity with a "last-set-wins" approach:

    // This will automatically clear maxTokens and use maxCompletionTokens
    OpenAiChatOptions options = OpenAiChatOptions.builder()
        .maxTokens(100)            // Set first
        .maxCompletionTokens(200)  // This clears maxTokens and logs a warning
        .build();

    // Result: maxTokens = null, maxCompletionTokens = 200

Runtime Options

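The OpenAiChatOptions.java class provides model configurations such as the model to use, the temperature, the frequency penalty, and so on.

On start-up, the default options can be configured with the OpenAiChatModel(api, options) constructor or the spring.ai.openai.chat.options.* properties.

At runtime, you can override the default options by adding new, request-specific options to the Prompt call.

For example, to override the default model and temperature for a specific request:

    ChatResponse response = chatModel.call(
        new Prompt(
            "Generate the names of 5 famous pirates.",
            OpenAiChatOptions.builder()
                .model("gpt-4o")
                .temperature(0.4)
                .build()
        ));

Note: In addition to the model-specific OpenAiChatOptions, you can use a portable ChatOptions instance, created with ChatOptions#builder().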
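The override behavior described in this section can be pictured as a field-by-field merge in which a value set on the request wins and an unset value falls back to the start-up default. The record below is a hypothetical stand-in used only to illustrate that idea; it is not one of the Spring AI option classes, and this document does not state how Spring AI performs the merge internally.

```java
// Hypothetical stand-in for chat options, not the Spring AI classes: a null
// field means "not set for this request, fall back to the default".
record OptionsSketch(String model, Double temperature) {

    // Request-specific values win; unset (null) values inherit the defaults.
    OptionsSketch mergedOver(OptionsSketch defaults) {
        return new OptionsSketch(
                model != null ? model : defaults.model(),
                temperature != null ? temperature : defaults.temperature());
    }

    public static void main(String[] args) {
        OptionsSketch defaults = new OptionsSketch("gpt-4o", 0.7);
        OptionsSketch perRequest = new OptionsSketch(null, 0.4);
        System.out.println(perRequest.mergedOver(defaults));
    }
}
```

In this example the request overrides only the temperature, so the merged options keep the default model gpt-4o and use the temperature 0.4.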

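Function Calling

You can register custom Java functions with the OpenAiChatModel and have the OpenAI model intelligently choose to output a JSON object containing the arguments to call one or more of the registered functions.

This is a powerful technique for connecting LLM capabilities with external tools and APIs.

Read more about Tool Calling.

Multimodal

Multimodality refers to a model's ability to simultaneously understand and process information from various sources, including text, images, audio, and other data formats. OpenAI supports text, vision, and audio input modalities.

Vision

OpenAI models that offer vision multimodal support include gpt-4, gpt-4o, and gpt-4o-mini.

Refer to the Vision guide for more information.

The OpenAI User Message API can incorporate a list of base64-encoded images or image URLs with the message.

Spring AI's Message interface facilitates multimodal AI models by introducing the Media type.

This type holds the data and details of media attachments in messages, using Spring's org.springframework.util.MimeType and an org.springframework.core.io.Resource for the raw media data.

Below is a code example excerpted from OpenAiChatModelIT.java, illustrating the fusion of user text with an image using the gpt-4o model.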

    var imageData = new ClassPathResource("/test.png");

    var userMessage = UserMessage.builder()
        .text("Explain what do you see on this picture?")
        .media(List.of(new Media(MimeTypeUtils.IMAGE_PNG, imageData)))
        .build();

    var response = this.chatModel
        .call(new Prompt(List.of(userMessage), OpenAiChatOptions.builder().model(modelName).build()));

Note: Starting June 17, 2024, GPT_4_VISION_PREVIEW continues to be available only to existing users of this model. If you are not an existing user, use the GPT_4_O or GPT_4_TURBO models. More details are available here.

Or the image URL equivalent using the gpt-4o model:

    var userMessage = UserMessage.builder()
        .text("Explain what do you see on this picture?")
        .media(List.of(Media.builder()
            .mimeType(MimeTypeUtils.IMAGE_PNG)
            .data(URI.create("https://docs.spring.io/spring-ai/reference/_images/multimodal.test.png"))
            .build()))
        .build();

    ChatResponse response = this.chatModel
        .call(new Prompt(List.of(userMessage), OpenAiChatOptions.builder().model(modelName).build()));

Note: You can pass multiple images as well.

The example shows a model that takes the multimodal.test.png image as input, along with the text message "Explain what do you see on this picture?", and generates a response like this:

This is an image of a fruit bowl with a simple design. The bowl is made of metal with curved wire edges that create an open structure, allowing the fruit to be visible from all angles. Inside the bowl, there are two yellow bananas resting on top of what appears to be a red apple. The bananas are slightly overripe, as indicated by the brown spots on their peels. The bowl has a metal ring at the top, likely to serve as a handle for carrying. The bowl is placed on a flat surface with a neutral-colored background that provides a clear view of the fruit inside.
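For readers unfamiliar with the term, a "base64-encoded image" is simply the raw image bytes rendered as text, commonly wrapped in a data URL. The sketch below shows that transformation with the JDK alone. The class and method names are hypothetical, and the three placeholder bytes are not a real PNG. Whether Spring AI builds exactly this string internally is not stated in this document, so read it as background rather than as the library's implementation.

```java
import java.util.Base64;

// Hypothetical helper, not Spring AI code: shows what "base64-encoded image"
// means by turning raw bytes into a data URL string.
final class ImageDataUrlSketch {

    static String toDataUrl(String mimeType, byte[] imageBytes) {
        String encoded = Base64.getEncoder().encodeToString(imageBytes);
        return "data:" + mimeType + ";base64," + encoded;
    }

    public static void main(String[] args) {
        byte[] fakeImage = {1, 2, 3}; // placeholder bytes, not a real PNG
        System.out.println(toDataUrl("image/png", fakeImage));
    }
}
```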

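Audio

OpenAI models that offer input audio multimodal support include gpt-4o-audio-preview.

Refer to the Audio guide for more information.

The OpenAI User Message API can incorporate a list of base64-encoded audio files with the message.

Spring AI's Message interface facilitates multimodal AI models by introducing the Media type.

This type holds the data and details of media attachments in messages, using Spring's org.springframework.util.MimeType and an org.springframework.core.io.Resource for the raw media data.

Currently, OpenAI supports only the following media types: audio/mp3 and audio/wav.

Below is a code example excerpted from OpenAiChatModelIT.java, illustrating the fusion of user text with an audio file using the gpt-4o-audio-preview model.

    var audioResource = new ClassPathResource("speech1.mp3");

    var userMessage = new UserMessage("What is this recording about?",
        List.of(new Media(MimeTypeUtils.parseMimeType("audio/mp3"), audioResource)));

    ChatResponse response = chatModel.call(new Prompt(List.of(userMessage),
        OpenAiChatOptions.builder().model(OpenAiApi.ChatModel.GPT_4_O_AUDIO_PREVIEW).build()));

Note: You can pass multiple audio files as well.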

Output Audio

OpenAI models that offer audio output multimodal support include gpt-4o-audio-preview.

Refer to the Audio guide for more information.

The OpenAI Assistant Message API can contain a list of base64-encoded audio files with the message.

Spring AI's Message interface facilitates multimodal AI models by introducing the Media type.

This type holds the data and details of media attachments in messages, using Spring's org.springframework.util.MimeType and an org.springframework.core.io.Resource for the raw media data.

Currently, OpenAI supports only the following audio types: audio/mp3 and audio/wav.

Below is a code example that sends user text and receives a response containing an audio byte array, using the gpt-4o-audio-preview model:

    var userMessage = new UserMessage("Tell me joke about Spring Framework");

    ChatResponse response = chatModel.call(new Prompt(List.of(userMessage),
        OpenAiChatOptions.builder()
            .model(OpenAiApi.ChatModel.GPT_4_O_AUDIO_PREVIEW)
            .outputModalities(List.of("text", "audio"))
            .outputAudio(new AudioParameters(Voice.ALLOY, AudioResponseFormat.WAV))
            .build()));

    String text = response.getResult().getOutput().getContent(); // audio transcript

    byte[] waveAudio = response.getResult().getOutput().getMedia().get(0).getDataAsByteArray(); // audio data

You must specify the audio modality in the OpenAiChatOptions to generate audio output.

The AudioParameters class provides the voice and audio format for the audio output.
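When the WAV format is requested, a quick sanity check on the returned bytes can catch an empty or unexpected payload before it is written to disk. The following sketch is a hypothetical helper, not part of Spring AI. It relies only on the standard RIFF/WAVE container layout, in which bytes 0 to 3 spell RIFF and bytes 8 to 11 spell WAVE, and it does not validate the rest of the file.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not Spring AI code: a cheap sanity check that a byte
// array starts with a RIFF/WAVE header before it is saved as a .wav file.
final class WavCheckSketch {

    static boolean looksLikeWav(byte[] data) {
        if (data == null || data.length < 12) {
            return false;
        }
        String riff = new String(data, 0, 4, StandardCharsets.US_ASCII);
        String wave = new String(data, 8, 4, StandardCharsets.US_ASCII);
        return riff.equals("RIFF") && wave.equals("WAVE");
    }

    public static void main(String[] args) {
        // Minimal 12-byte header with a zeroed size field, for demonstration only.
        byte[] header = "RIFF\0\0\0\0WAVE".getBytes(StandardCharsets.US_ASCII);
        System.out.println(looksLikeWav(header));
    }
}
```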

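Structured Outputs

OpenAI provides a custom Structured Outputs API that ensures your model generates responses conforming strictly to the JSON Schema you provide.

These APIs offer enhanced control and precision in addition to the existing, model-agnostic Spring AI Structured Output Converter.

Note: Currently, OpenAI supports a subset of the JSON Schema language.

Configuration

Spring AI lets you configure the response format either programmatically, using the OpenAiChatOptions builder, or through application properties.

Using the Chat Options Builder

You can set the response format programmatically with the OpenAiChatOptions builder, as shown below: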

    String jsonSchema = """
        {
            "type": "object",
            "properties": {
                "steps": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "explanation": { "type": "string" },
                            "output": { "type": "string" }
                        },
                        "required": ["explanation", "output"],
                        "additionalProperties": false
                    }
                },
                "final_answer": { "type": "string" }
            },
            "required": ["steps", "final_answer"],
            "additionalProperties": false
        }
        """;

    // jsonSchema and prompt are local variables here, so they are referenced without "this."
    Prompt prompt = new Prompt("how can I solve 8x + 7 = -23",
        OpenAiChatOptions.builder()
            .model(ChatModel.GPT_4_O_MINI)
            .responseFormat(new ResponseFormat(ResponseFormat.Type.JSON_SCHEMA, jsonSchema))
            .build());

    ChatResponse response = this.openAiChatModel.call(prompt);

Note: Adhere to the subset of the JSON Schema language that OpenAI supports.

Integrating with the BeanOutputConverter Utility

You can use the existing BeanOutputConverter utility to generate the JSON Schema automatically from your domain objects and later convert the structured response into domain-specific instances:

Java:

    record MathReasoning(
        @JsonProperty(required = true, value = "steps") Steps steps,
        @JsonProperty(required = true, value = "final_answer") String finalAnswer) {

        record Steps(
            @JsonProperty(required = true, value = "items") Items[] items) {

            record Items(
                @JsonProperty(required = true, value = "explanation") String explanation,
                @JsonProperty(required = true, value = "output") String output) {
            }
        }
    }

    var outputConverter = new BeanOutputConverter<>(MathReasoning.class);

    var jsonSchema = outputConverter.getJsonSchema();

    Prompt prompt = new Prompt("how can I solve 8x + 7 = -23",
        OpenAiChatOptions.builder()
            .model(ChatModel.GPT_4_O_MINI)
            .responseFormat(new ResponseFormat(ResponseFormat.Type.JSON_SCHEMA, jsonSchema))
            .build());

    ChatResponse response = this.openAiChatModel.call(prompt);
    String content = response.getResult().getOutput().getContent();

    MathReasoning mathReasoning = outputConverter.convert(content);

Kotlin:

    data class MathReasoning(
        val steps: Steps,
        @get:JsonProperty(value = "final_answer") val finalAnswer: String) {

        data class Steps(val items: Array<Items>) {

            data class Items(
                val explanation: String,
                val output: String)
        }
    }

    val outputConverter = BeanOutputConverter(MathReasoning::class.java)

    val jsonSchema = outputConverter.jsonSchema

    val prompt = Prompt("how can I solve 8x + 7 = -23",
        OpenAiChatOptions.builder()
            .model(ChatModel.GPT_4_O_MINI)
            .responseFormat(ResponseFormat(ResponseFormat.Type.JSON_SCHEMA, jsonSchema))
            .build())

    val response = openAiChatModel.call(prompt)
    val content = response.getResult().getOutput().getContent()

    val mathReasoning = outputConverter.convert(content)

Configuring via Application Properties

Alternatively, when using the OpenAI auto-configuration, you can configure the desired response format through the following application properties:

    spring.ai.openai.api-key=YOUR_API_KEY
    spring.ai.openai.chat.options.model=gpt-4o-mini

    spring.ai.openai.chat.options.response-format.type=JSON_SCHEMA
    spring.ai.openai.chat.options.response-format.name=MySchemaName
    spring.ai.openai.chat.options.response-format.schema={"type":"object","properties":{"steps":{"type":"array","items":{"type":"object","properties":{"explanation":{"type":"string"},"output":{"type":"string"}},"required":["explanation","output"],"additionalProperties":false}},"final_answer":{"type":"string"}},"required":["steps","final_answer"],"additionalProperties":false}
    spring.ai.openai.chat.options.response-format.strict=true

Sample Controller

Create a new Spring Boot project and add the spring-ai-starter-model-openai to your pom (or gradle) dependencies.

Add an application.properties file under the src/main/resources directory to enable and configure the OpenAI chat model:

    spring.ai.openai.api-key=YOUR_API_KEY
    spring.ai.openai.chat.options.model=gpt-4o
    spring.ai.openai.chat.options.temperature=0.7

Note: Replace YOUR_API_KEY with your own OpenAI API key.

This creates an OpenAiChatModel implementation that you can inject into your classes.

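Here is an example of a simple @RestController class that uses the chat model for text generation.

    import java.util.Map;

    import org.springframework.ai.chat.messages.UserMessage;
    import org.springframework.ai.chat.model.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.openai.OpenAiChatModel;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;
    import reactor.core.publisher.Flux;

    @RestController
    public class ChatController {

        private final OpenAiChatModel chatModel;

        @Autowired
        public ChatController(OpenAiChatModel chatModel) {
            this.chatModel = chatModel;
        }

        // Blocking call: returns the full generation as a single JSON object
        @GetMapping("/ai/generate")
        public Map<String,String> generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
            return Map.of("generation", this.chatModel.call(message));
        }

        // Streaming call: emits partial responses as they arrive
        @GetMapping("/ai/generateStream")
        public Flux<ChatResponse> generateStream(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
            Prompt prompt = new Prompt(new UserMessage(message));
            return this.chatModel.stream(prompt);
        }
    }

Manual Configuration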

The OpenAiChatModel implements the ChatModel and StreamingChatModel interfaces and uses the low-level OpenAiApi client to connect to the OpenAI service.

Add the spring-ai-openai dependency to your project's Maven pom.xml file:

    <dependency>
        <groupId>org.springframework.ai</groupId>
        <artifactId>spring-ai-openai</artifactId>
    </dependency>

or to your Gradle build.gradle build file:

    dependencies {
        implementation 'org.springframework.ai:spring-ai-openai'
    }

Note: See the Dependency Management section to add the Spring AI BOM to your build file.

Next, create an OpenAiChatModel and use it for text generation:

    var openAiApi = OpenAiApi.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .build();

    var openAiChatOptions = OpenAiChatOptions.builder()
        .model("gpt-3.5-turbo")
        .temperature(0.4)
        .maxTokens(200)
        .build();

    var chatModel = new OpenAiChatModel(openAiApi, openAiChatOptions);

    ChatResponse response = chatModel.call(
        new Prompt("Generate the names of 5 famous pirates."));

    // Or with streaming responses
    Flux<ChatResponse> streamResponse = chatModel.stream(
        new Prompt("Generate the names of 5 famous pirates."));

The OpenAiChatOptions provides the configuration information for the chat requests.

The OpenAiApi.Builder and OpenAiChatOptions.Builder are fluent option builders for the API client and the chat configuration, respectively.

低级 OpenAiApi 客户端

OpenAiApi 为 OpenAI Chat API OpenAI Chat API 提供了轻量级 Java 客户端。

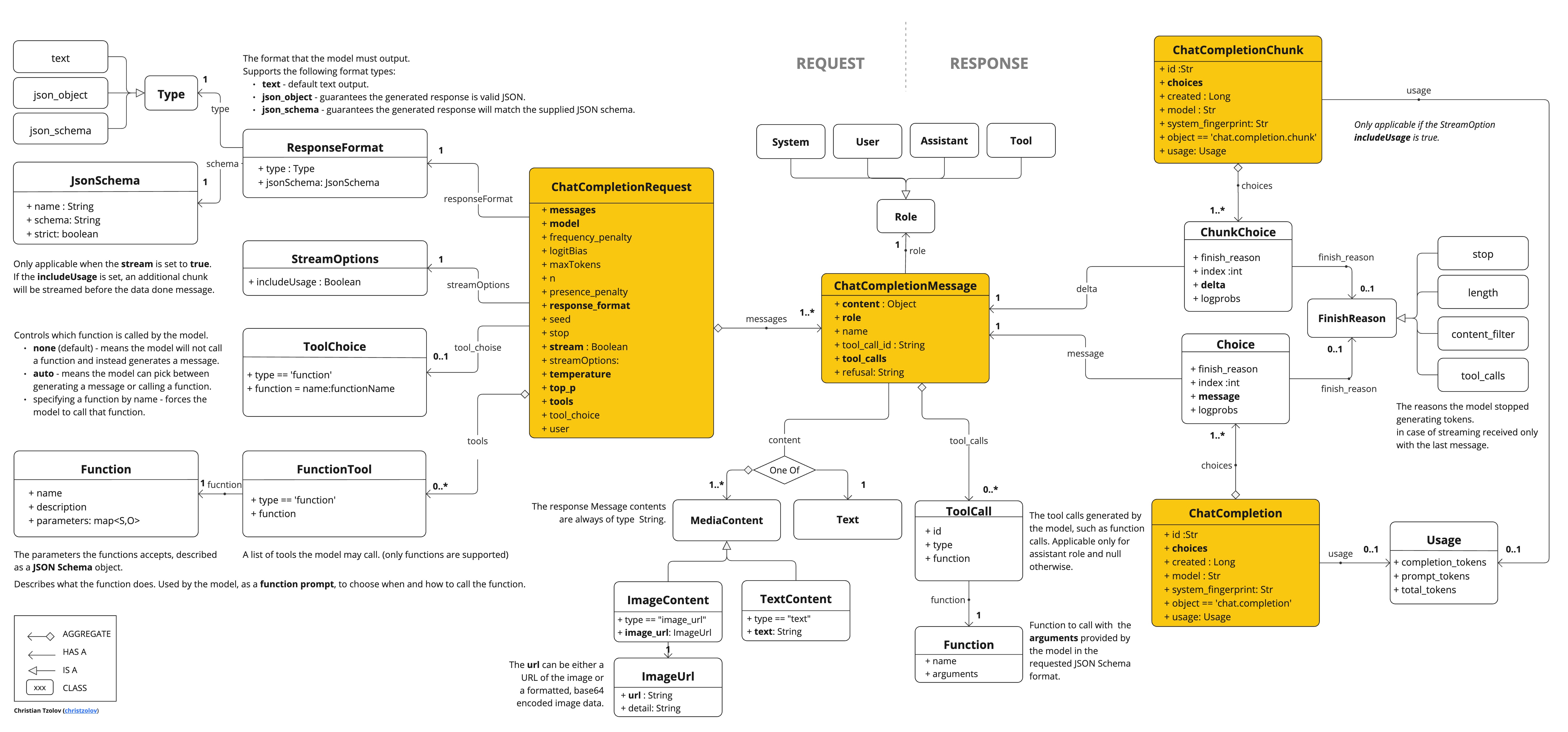

以下类图说明了 OpenAiApi 聊天接口和构建块:

这是一个简单的代码片段,展示了如何以编程方式使用 API:

OpenAiApi openAiApi = OpenAiApi.builder()

.apiKey(System.getenv("OPENAI_API_KEY"))

.build();

ChatCompletionMessage chatCompletionMessage =

new ChatCompletionMessage("Hello world", Role.USER);

// Sync request

ResponseEntity<ChatCompletion> response = this.openAiApi.chatCompletionEntity(

new ChatCompletionRequest(List.of(this.chatCompletionMessage), "gpt-3.5-turbo", 0.8, false));

// Streaming request

Flux<ChatCompletionChunk> streamResponse = this.openAiApi.chatCompletionStream(

new ChatCompletionRequest(List.of(this.chatCompletionMessage), "gpt-3.5-turbo", 0.8, true));请遵循 OpenAiApi.java 的 JavaDoc 以获取更多信息。

低级 API 示例

-

OpenAiApiIT.java 测试提供了一些如何使用轻量级库的通用示例。

-

OpenAiApiToolFunctionCallIT.java 测试展示了如何使用低级 API 调用工具函数。 基于 OpenAI 函数调用 教程。

API Key Management

Spring AI provides flexible API key management through the ApiKey interface and its implementations. The default implementation, SimpleApiKey, is suitable for most use cases, but you can also create custom implementations for more complex scenarios.

Default Configuration

By default, Spring Boot auto-configuration creates an API key bean from the spring.ai.openai.api-key property:

    spring.ai.openai.api-key=your-api-key-here

Custom API Key Configuration

You can use the builder pattern to create a custom OpenAiApi instance with your own ApiKey implementation:

    ApiKey customApiKey = new ApiKey() {
        @Override
        public String getValue() {
            // Custom logic to retrieve API key
            return "your-api-key-here";
        }
    };

    OpenAiApi openAiApi = OpenAiApi.builder()
        .apiKey(customApiKey)
        .build();

    // Create a chat model with the custom OpenAiApi instance
    OpenAiChatModel chatModel = OpenAiChatModel.builder()
        .openAiApi(openAiApi)
        .build();

    // Build the ChatClient using the custom chat model
    ChatClient openAiChatClient = ChatClient.builder(chatModel).build();

This is useful when you need to:

- Retrieve the API key from a secure key store
- Rotate API keys dynamically
- Implement custom API key selection logic
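As one possible shape for the dynamic rotation case, the sketch below keeps the current key in an AtomicReference so that it can be swapped at runtime without recreating the client. The class is hypothetical and deliberately independent of Spring AI: a custom ApiKey implementation could delegate its getValue() method to get(). This only helps if the key is read again for each request, which this document does not confirm, so verify that behavior before relying on it.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical sketch, not Spring AI code: a key holder whose value can be
// swapped at runtime. A custom ApiKey implementation could delegate its
// getValue() method to get() below.
final class RotatingKeySketch implements Supplier<String> {

    private final AtomicReference<String> current;

    RotatingKeySketch(String initialKey) {
        this.current = new AtomicReference<>(initialKey);
    }

    // Called by whatever process fetches a fresh key (for example, a scheduled job).
    void rotate(String newKey) {
        current.set(newKey);
    }

    @Override
    public String get() {
        return current.get();
    }

    public static void main(String[] args) {
        RotatingKeySketch key = new RotatingKeySketch("key-v1");
        key.rotate("key-v2");
        System.out.println(key.get());
    }
}
```

The AtomicReference makes the swap visible to all threads at once, so concurrent requests see either the old key or the new key, never a partially updated value.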