VertexAI Gemini Chat

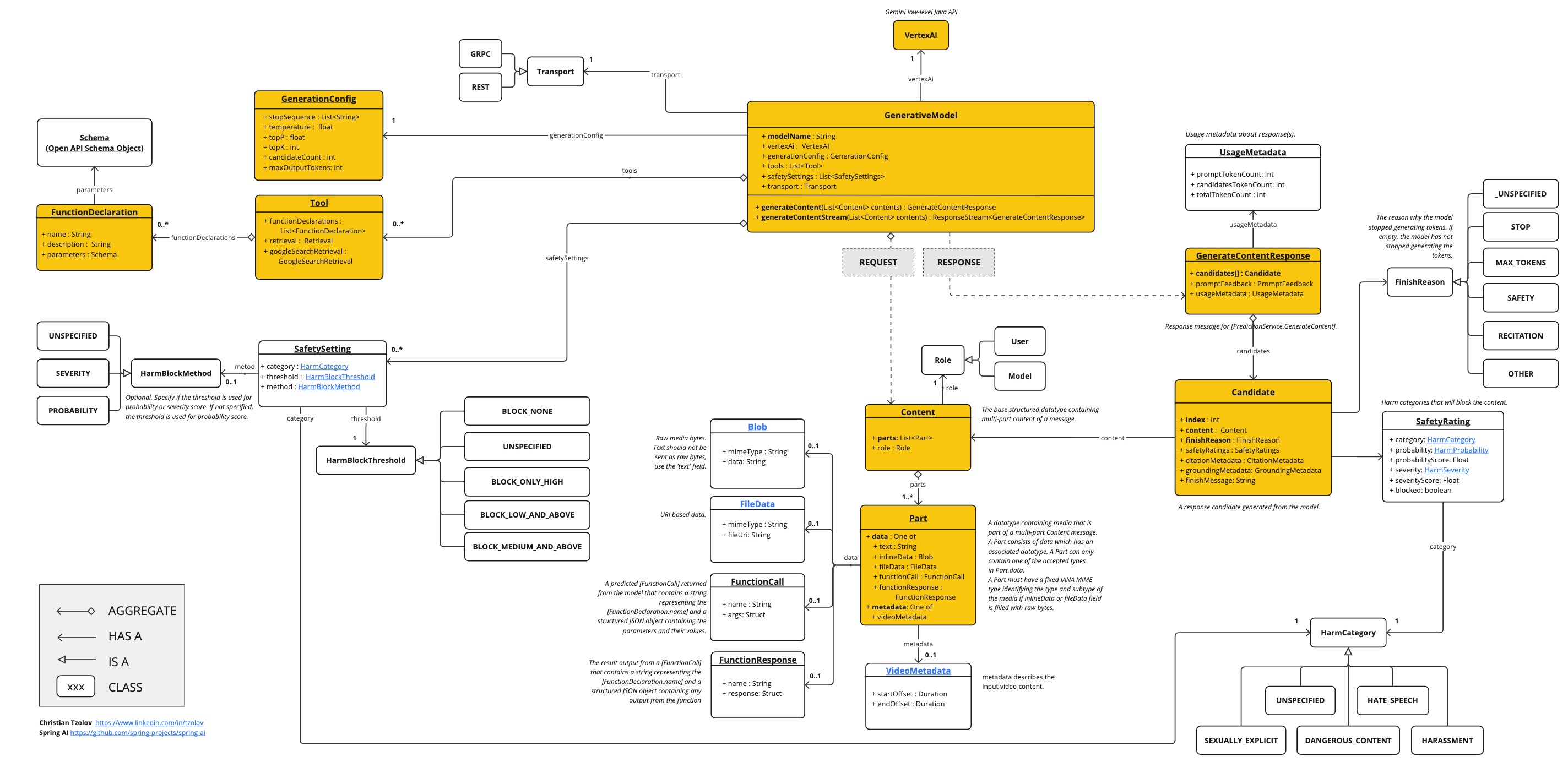

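The Vertex AI Gemini API allows developers to build generative AI applications using the Gemini model. It supports multimodal prompts as input and outputs text or code. A multimodal model is one that can process information from multiple modalities, including images, video, and text. For example, you can send the model a photo of a plate of cookies and ask it for a recipe for those cookies.

Gemini is a family of generative AI models developed by Google DeepMind and designed for multimodal use cases. The Gemini API gives you access to Gemini 2.0 Flash and Gemini 2.0 Flash-Lite. For specifications of the Vertex AI Gemini API models, see Model information.

Gemini API Reference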

Prerequisites

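- Install the gcloud CLI appropriate for your operating system.
- Authenticate by running the following command. Replace PROJECT_ID with your Google Cloud project ID and ACCOUNT with your Google Cloud username.

gcloud config set project <PROJECT_ID> &&
gcloud auth application-default login <ACCOUNT>

Auto-configuration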

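Note: The artifact names of the Spring AI auto-configuration and starter modules have changed significantly. See the upgrade notes for more information.

Spring AI provides Spring Boot auto-configuration for the VertexAI Gemini chat client. To enable it, add the following dependency to your project's Maven pom.xml or Gradle build.gradle build file: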

Maven:

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-vertex-ai-gemini</artifactId>
</dependency>

Gradle:

dependencies {
    implementation 'org.springframework.ai:spring-ai-starter-model-vertex-ai-gemini'
}

Note: See the Dependency Management section to add the Spring AI BOM to your build file.

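Chat Properties

Note: Enabling and disabling of the chat auto-configuration is now configured through a prefixed property (see spring.ai.model.chat in the table below).

The prefix spring.ai.vertex.ai.gemini is the property prefix that lets you connect to VertexAI.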

| Property | Description | Default |
|---|---|---|
| spring.ai.model.chat | Enables the chat model client. | vertexai |
| spring.ai.vertex.ai.gemini.project-id | Google Cloud Platform project ID. | - |
| spring.ai.vertex.ai.gemini.location | Region. | - |
| spring.ai.vertex.ai.gemini.credentials-uri | URI of the Vertex AI Gemini credentials. When provided, it is used to create ... | - |
| spring.ai.vertex.ai.gemini.api-endpoint | Vertex AI Gemini API endpoint. | - |
| spring.ai.vertex.ai.gemini.scopes | - | |
| spring.ai.vertex.ai.gemini.transport | API transport: GRPC or REST. | GRPC |

The prefix spring.ai.vertex.ai.gemini.chat is the property prefix that lets you configure the chat model implementation for VertexAI Gemini Chat.

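| Property | Description | Default |
|---|---|---|
| spring.ai.vertex.ai.gemini.chat.options.model | Supported Vertex AI Gemini chat models include ... | gemini-2.0-flash |
| spring.ai.vertex.ai.gemini.chat.options.response-mime-type | Output response MIME type of the generated candidate text. | |
| spring.ai.vertex.ai.gemini.chat.options.google-search-retrieval | Use the Google Search grounding feature. | |
| spring.ai.vertex.ai.gemini.chat.options.temperature | Controls the randomness of the output. Values range over [0.0, 1.0], inclusive. A value closer to 1.0 produces more varied responses, while a value closer to 0.0 typically results in less surprising responses from the generator. This value specifies the default used by the backend when calling the generator. | 0.7 |
| spring.ai.vertex.ai.gemini.chat.options.top-k | The maximum number of tokens to consider when sampling. The generator uses combined top-k and nucleus sampling. Top-k sampling considers the set of topK most probable tokens. | - |
| spring.ai.vertex.ai.gemini.chat.options.top-p | The maximum cumulative probability of tokens to consider when sampling. The generator uses combined top-k and nucleus sampling. Nucleus sampling considers the smallest set of tokens whose probability sum is at least topP. | - |
| spring.ai.vertex.ai.gemini.chat.options.candidate-count | The number of generated response messages to return. This value must be in [1, 8], inclusive. Defaults to 1. | 1 |
| spring.ai.vertex.ai.gemini.chat.options.max-output-tokens | The maximum number of tokens to generate. | - |
| spring.ai.vertex.ai.gemini.chat.options.tool-names | List of tools, identified by their names, to enable for function calling in a single prompt request. Tools with those names must exist in the ToolCallback registry. | - |
| (deprecated, replaced by ...) | List of functions, identified by their names, to enable for function calling in a single prompt request. Functions with those names must exist in the functionCallbacks registry. | - |
| spring.ai.vertex.ai.gemini.chat.options.internal-tool-execution-enabled | If true, the tool execution is performed; otherwise the model's response is returned to the user. The default is null, but if it is null, ... is taken into account. | - |
| (deprecated, replaced by ...) | If true, Spring AI does not handle function calls internally but proxies them to the client. The client is then responsible for handling the function calls, dispatching them to the appropriate function, and returning the results. If false (the default), Spring AI handles function calls internally. Applicable only to chat models with function calling support. | false |
| spring.ai.vertex.ai.gemini.chat.options.safety-settings | List of safety settings that control the safety filters, as defined by Vertex AI Safety Filters. Each safety setting can have a method, a threshold, and a category. | - |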

Note: All properties prefixed with ...
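The interaction between temperature, top-k, and top-p described in the table can be easier to follow in code. The following is a minimal, self-contained sketch of combined top-k and nucleus filtering plus temperature rescaling. The class `SamplingSketch`, the record `Token`, and both methods are hypothetical names introduced for illustration only; this is not how Vertex AI or Spring AI implement sampling.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Illustrative sketch only; names and logic are assumptions, not the Vertex AI implementation.
final class SamplingSketch {

    // A candidate token with an already normalized probability.
    record Token(String text, double probability) {}

    // Keeps at most topK of the most probable tokens, stopping early once the
    // cumulative probability of the kept tokens reaches topP (nucleus sampling).
    static List<Token> filter(List<Token> tokens, int topK, double topP) {
        List<Token> sorted = new ArrayList<>(tokens);
        sorted.sort(Comparator.comparingDouble(Token::probability).reversed());
        List<Token> kept = new ArrayList<>();
        double cumulative = 0.0;
        for (Token token : sorted) {
            if (kept.size() >= topK || cumulative >= topP) {
                break;
            }
            kept.add(token);
            cumulative += token.probability();
        }
        return kept;
    }

    // Rescales probabilities as p^(1/T) and renormalizes. Lower temperatures
    // sharpen the distribution; this sketch requires T > 0 to avoid dividing by zero.
    static double[] applyTemperature(double[] probabilities, double temperature) {
        if (temperature <= 0.0) {
            throw new IllegalArgumentException("temperature must be > 0 in this sketch");
        }
        double[] scaled = new double[probabilities.length];
        double sum = 0.0;
        for (int i = 0; i < probabilities.length; i++) {
            scaled[i] = Math.pow(probabilities[i], 1.0 / temperature);
            sum += scaled[i];
        }
        for (int i = 0; i < scaled.length; i++) {
            scaled[i] /= sum;
        }
        return scaled;
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Token("a", 0.5), new Token("b", 0.3),
                new Token("c", 0.15), new Token("d", 0.05));
        System.out.println(filter(tokens, 3, 0.7));
    }
}
```

In this sketch the top-k cap and the top-p threshold are applied in the same pass, so whichever limit is reached first ends the selection.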

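Runtime Options

VertexAiGeminiChatOptions.java provides model configuration options such as temperature and topK.

On start-up, the default options can be configured with the VertexAiGeminiChatModel(api, options) constructor or the spring.ai.vertex.ai.gemini.chat.options.* properties.

At runtime, you can override the default options by adding new, request-specific options to the Prompt call. For example, to override the default temperature for a specific request: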

ChatResponse response = chatModel.call(
    new Prompt(
        "Generate the names of 5 famous pirates.",
        VertexAiGeminiChatOptions.builder()
            .temperature(0.4)
            .build()
    ));

Note: In addition to the model-specific ...
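The override behavior (request-specific options take precedence, unset values fall back to the start-up defaults) can be sketched independently of Spring AI. The `Options` record and its `mergedOver` method below are hypothetical and exist only to illustrate the idea; they are not part of the Spring AI API, and the actual merge logic in the library may differ.

```java
// Illustrative sketch only; Options and mergedOver are hypothetical names.
final class OptionsMergeSketch {

    // A tiny options holder in which null means "not set for this request".
    record Options(String model, Double temperature, Integer topK) {

        // Request-specific values win; unset (null) fields fall back to the defaults.
        Options mergedOver(Options defaults) {
            return new Options(
                    model != null ? model : defaults.model(),
                    temperature != null ? temperature : defaults.temperature(),
                    topK != null ? topK : defaults.topK());
        }
    }

    public static void main(String[] args) {
        Options defaults = new Options("gemini-2.0-flash", 0.7, null);
        Options request = new Options(null, 0.4, null); // only temperature is overridden
        System.out.println(request.mergedOver(defaults));
    }
}
```

Here only the temperature changes for the request, while the model name is inherited from the defaults.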

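Tool Calling

The Vertex AI Gemini models support tool calling (called function calling in the Google Gemini context), which allows the model to use tools during a conversation.

Here is an example of how to define and use @Tool-based tools: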

public class WeatherService {

    @Tool(description = "Get the weather in location")
    public String weatherByLocation(@ToolParam(description = "City or state name") String location) {
        ...
    }
}

String response = ChatClient.create(this.chatModel)
    .prompt("What's the weather like in Boston?")
    .tools(new WeatherService())
    .call()
    .content();

You can also use java.util.function beans as tools:

@Bean
@Description("Get the weather in location. Return temperature in 36°F or 36°C format.")
public Function<Request, Response> weatherFunction() {
    return new MockWeatherService();
}

String response = ChatClient.create(this.chatModel)
    .prompt("What's the weather like in Boston?")
    .toolNames("weatherFunction")
    .inputType(Request.class)
    .call()
    .content();

Find more information in the Tools documentation.
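Conceptually, tool calling means the model names a tool and supplies arguments, and the application looks that tool up and runs it. The sketch below shows that lookup-and-dispatch step with plain Java. `ToolDispatchSketch`, `ToolCall`, and the canned weather function are hypothetical and are not Spring AI classes; the real ToolCallback registry works with richer argument types.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Illustrative sketch only; not the Spring AI ToolCallback registry.
final class ToolDispatchSketch {

    // Hypothetical shape of a model-issued tool call: a tool name and one string argument.
    record ToolCall(String name, String argument) {}

    private final Map<String, Function<String, String>> registry = new HashMap<>();

    // Registers a tool under the name the model will use to request it.
    void register(String name, Function<String, String> tool) {
        registry.put(name, tool);
    }

    // Looks up the requested tool and runs it; unknown names are rejected.
    String execute(ToolCall call) {
        Function<String, String> tool = registry.get(call.name());
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + call.name());
        }
        return tool.apply(call.argument());
    }

    public static void main(String[] args) {
        ToolDispatchSketch tools = new ToolDispatchSketch();
        // Canned response standing in for a real weather lookup.
        tools.register("weatherByLocation", location -> "Sunny in " + location);
        System.out.println(tools.execute(new ToolCall("weatherByLocation", "Boston")));
    }
}
```

Rejecting unknown names mirrors the requirement stated in the properties table that tools referenced by name must exist in the registry.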

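Multimodality

Multimodality refers to a model's ability to simultaneously understand and process information from various (input) sources, including text, PDF, images, audio, and other data formats.

Image, Audio, Video

Google's Gemini AI models support this capability by understanding and integrating text, code, audio, images, and video. For more details, see the blog post Introducing Gemini.

Spring AI's Message interface supports multimodal AI models by introducing the Media type. This type holds the data and details about media attachments in a message, using Spring's org.springframework.util.MimeType and a java.lang.Object for the raw media data.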
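To make the shape of such an attachment concrete, here is a minimal sketch of a message that carries text plus typed binary parts. `MediaPart`, `Message`, and `fromFile` are hypothetical stand-ins, not Spring AI's Media or UserMessage classes, and the extension-to-MIME mapping is an assumption limited to the two formats used in this section.

```java
import java.util.List;
import java.util.Map;

// Illustrative sketch only; MediaPart and Message are hypothetical, not Spring AI types.
final class MediaSketch {

    // A media attachment: a MIME type describing the raw bytes, plus the bytes themselves.
    record MediaPart(String mimeType, byte[] data) {}

    // A user message combining text with zero or more attachments.
    record Message(String text, List<MediaPart> media) {}

    // Assumed mapping from file extension to MIME type for this example only.
    private static final Map<String, String> MIME_BY_EXTENSION = Map.of(
            "png", "image/png",
            "pdf", "application/pdf");

    // Builds an attachment, falling back to a generic binary type for unknown extensions.
    static MediaPart fromFile(String fileName, byte[] data) {
        int dot = fileName.lastIndexOf('.');
        String extension = dot >= 0 ? fileName.substring(dot + 1).toLowerCase() : "";
        String mimeType = MIME_BY_EXTENSION.getOrDefault(extension, "application/octet-stream");
        return new MediaPart(mimeType, data);
    }

    public static void main(String[] args) {
        MediaPart image = fromFile("vertex-test.png", new byte[] {1, 2, 3});
        Message message = new Message("Explain what you see in this picture.", List.of(image));
        System.out.println(message.media().get(0).mimeType());
    }
}
```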

Below is a simple code example, taken from VertexAiGeminiChatModelIT#multiModalityTest(), that demonstrates combining user text with an image.

byte[] data = new ClassPathResource("/vertex-test.png").getContentAsByteArray();

// data and userMessage are local variables, so they are referenced without "this."
var userMessage = new UserMessage("Explain what do you see on this picture?",
        List.of(new Media(MimeTypeUtils.IMAGE_PNG, data)));

ChatResponse response = chatModel.call(new Prompt(List.of(userMessage)));

The latest Vertex Gemini supports PDF input types.

Use the application/pdf media type to attach a PDF file to the message:

var pdfData = new ClassPathResource("/spring-ai-reference-overview.pdf");

var userMessage = new UserMessage(
        "You are a very professional document summarization specialist. Please summarize the given document.",
        List.of(new Media(new MimeType("application", "pdf"), pdfData)));

var response = this.chatModel.call(new Prompt(List.of(userMessage)));

Sample Controller

Create a new Spring Boot project and add spring-ai-starter-model-vertex-ai-gemini to your pom (or gradle) dependencies.

Add an application.properties file under the src/main/resources directory to enable and configure the VertexAi chat model:

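spring.ai.vertex.ai.gemini.project-id=PROJECT_ID
spring.ai.vertex.ai.gemini.location=LOCATION
spring.ai.vertex.ai.gemini.chat.options.model=gemini-2.0-flash
spring.ai.vertex.ai.gemini.chat.options.temperature=0.5

Note: Replace PROJECT_ID with your Google Cloud project ID and LOCATION with a Google Cloud region.

Note: Each model has its own set of supported regions; you can find the list of supported regions on the model page. For example, model= ...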

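This creates a VertexAiGeminiChatModel implementation that you can inject into your classes. Here is an example of a simple @RestController class that uses the chat model for text generation.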

@RestController
public class ChatController {

    private final VertexAiGeminiChatModel chatModel;

    @Autowired
    public ChatController(VertexAiGeminiChatModel chatModel) {
        this.chatModel = chatModel;
    }

    // Blocking call: returns the complete generation in one response.
    @GetMapping("/ai/generate")
    public Map<String, String> generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        return Map.of("generation", this.chatModel.call(message));
    }

    // Streaming call: emits ChatResponse chunks as they are produced.
    @GetMapping("/ai/generateStream")
    public Flux<ChatResponse> generateStream(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        Prompt prompt = new Prompt(new UserMessage(message));
        return this.chatModel.stream(prompt);
    }
}

Manual Configuration

VertexAiGeminiChatModel implements ChatModel and uses VertexAI to connect to the Vertex AI Gemini service.

Add the spring-ai-vertex-ai-gemini dependency to your project's Maven pom.xml file:

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-vertex-ai-gemini</artifactId>
</dependency>

or to your Gradle build.gradle build file:

dependencies {
    implementation 'org.springframework.ai:spring-ai-vertex-ai-gemini'
}

Note: See the Dependency Management section to add the Spring AI BOM to your build file.

Next, create a VertexAiGeminiChatModel and use it for text generation:

VertexAI vertexApi = new VertexAI(projectId, location);

// vertexApi and chatModel are local variables, so they are referenced without "this."
var chatModel = new VertexAiGeminiChatModel(vertexApi,
    VertexAiGeminiChatOptions.builder()
        .model(ChatModel.GEMINI_2_0_FLASH)
        .temperature(0.4)
        .build());

ChatResponse response = chatModel.call(
    new Prompt("Generate the names of 5 famous pirates."));

VertexAiGeminiChatOptions provides the configuration information for the chat requests. VertexAiGeminiChatOptions.Builder is a fluent options builder.