Chat Model API

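The Chat Model API lets developers integrate AI-powered chat completion into their applications. It uses pre-trained language models, such as GPT (Generative Pre-trained Transformer), to generate human-like responses to user input in natural language.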

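The API typically works by sending a prompt or a partial conversation to the AI model. The model then generates a completion or continuation of the conversation based on its training data and its understanding of natural language patterns. The completed response is returned to the application, which can present it to the user or use it for further processing.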

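The Spring AI Chat Model API is designed to be a simple and portable interface for interacting with various AI models, allowing developers to switch between models with minimal code changes.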

This design aligns with Spring's philosophy of modularity and interchangeability.

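In addition, with companion classes such as Prompt for input encapsulation and ChatResponse for output handling, the Chat Model API unifies communication with AI models.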

It manages the complexity of request preparation and response parsing, offering a direct and simplified API interaction.
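As a minimal sketch of the portability idea, the plain Java (17+) code below writes a service against a single chat interface and swaps two implementations without touching the service. `SimpleChatModel`, `UppercaseModel`, `ReverseModel`, and `GreetingService` are hypothetical stand-ins, not Spring AI types; a real application would inject a provider's `ChatModel` bean instead.

```java
// Hypothetical stand-in for a provider-neutral chat interface (not Spring AI's ChatModel).
interface SimpleChatModel {
    String call(String message);
}

// Two hypothetical "providers" with different behavior behind the same interface.
class UppercaseModel implements SimpleChatModel {
    @Override
    public String call(String message) {
        return message.toUpperCase();
    }
}

class ReverseModel implements SimpleChatModel {
    @Override
    public String call(String message) {
        return new StringBuilder(message).reverse().toString();
    }
}

// Application code depends only on the interface, so swapping
// providers requires no change in this class.
class GreetingService {
    private final SimpleChatModel chatModel;

    GreetingService(SimpleChatModel chatModel) {
        this.chatModel = chatModel;
    }

    String greet(String name) {
        return chatModel.call("hello " + name);
    }
}

class PortabilityDemo {
    public static void main(String[] args) {
        System.out.println(new GreetingService(new UppercaseModel()).greet("ada")); // HELLO ADA
        System.out.println(new GreetingService(new ReverseModel()).greet("ada"));   // ada olleh
    }
}
```

Only the object passed to the `GreetingService` constructor changes; in a Spring application that choice would typically move into configuration.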

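You can find more about the available implementations in the Available Implementations section, and a detailed comparison in the Chat Models Comparison section.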

API Overview

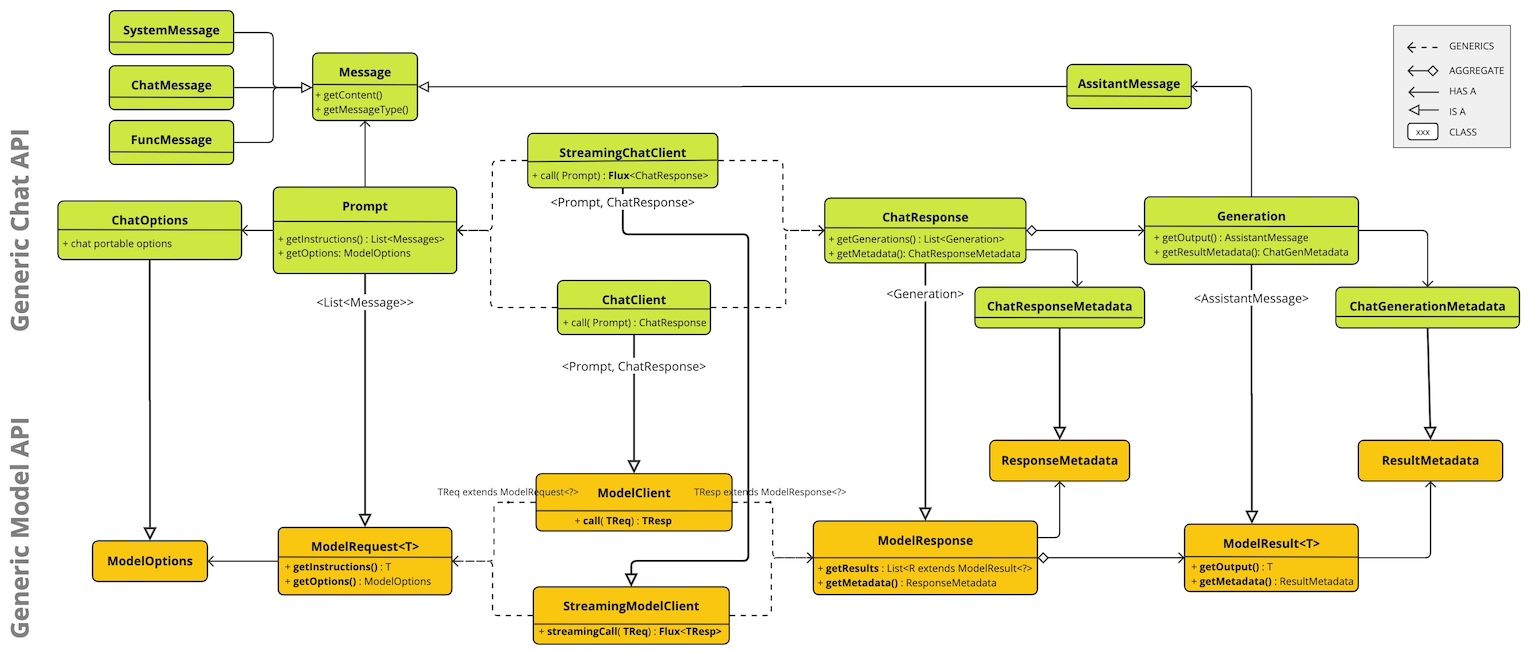

This section provides a guide to the Spring AI Chat Model API interfaces and associated classes.

ChatModel

Here is the ChatModel interface definition:

public interface ChatModel extends Model<Prompt, ChatResponse>, StreamingChatModel {

    default String call(String message) {...}

    @Override
    ChatResponse call(Prompt prompt);
}

The call() method with a String parameter simplifies initial use, sparing you the more sophisticated Prompt and ChatResponse classes.

In real-world applications, it is more common to use the call() method that takes a Prompt instance and returns a ChatResponse.
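One way to picture the relationship between the two call() methods is that the String overload wraps the text in a Prompt, delegates to call(Prompt), and unwraps the first result. The sketch below assumes exactly that relationship and uses hypothetical, heavily simplified records (`Message`, `Prompt`, `Generation`, `ChatResponse`) in place of the real Spring AI classes, so it runs on plain Java 17+; Spring AI's actual default method may differ in detail.

```java
import java.util.List;

// Hypothetical, simplified stand-ins for the Spring AI types.
record Message(String role, String text) {}
record Prompt(List<Message> messages) {}
record Generation(String output) {}
record ChatResponse(List<Generation> results) {
    // Convenience accessor for the first generation (null if there is none).
    Generation getResult() {
        return results.isEmpty() ? null : results.get(0);
    }
}

interface MiniChatModel {
    // The Prompt-based method that every implementation must provide.
    ChatResponse call(Prompt prompt);

    // Convenience overload: wrap the text in a single user message,
    // call the Prompt-based method, and unwrap the first generation's text.
    default String call(String message) {
        Prompt prompt = new Prompt(List.of(new Message("user", message)));
        Generation generation = call(prompt).getResult();
        return generation != null ? generation.output() : "";
    }
}

// A toy implementation that echoes the last message back.
class EchoChatModel implements MiniChatModel {
    @Override
    public ChatResponse call(Prompt prompt) {
        List<Message> messages = prompt.messages();
        String last = messages.get(messages.size() - 1).text();
        return new ChatResponse(List.of(new Generation("echo: " + last)));
    }
}
```

With this setup, `new EchoChatModel().call("hi")` returns `"echo: hi"`, while the Prompt overload gives access to the full response object.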

StreamingChatModel

Here is the StreamingChatModel interface definition:

public interface StreamingChatModel extends StreamingModel<Prompt, ChatResponse> {

    default Flux<String> stream(String message) {...}

    @Override
    Flux<ChatResponse> stream(Prompt prompt);
}

The stream() method takes a String or a Prompt parameter, like ChatModel, but it streams the response using the reactive Flux API.
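Reactor's `Flux` is not part of the JDK, so the sketch below swaps in `java.util.stream.Stream` to show the same shape: a default stream(String) that wraps the text in a Prompt and maps each partial response chunk to its text. Note that a `Stream` is synchronous and pull-based, whereas `Flux` is an asynchronous, push-based publisher. `Prompt`, `ChatResponse`, `MiniStreamingChatModel`, and `WordByWordModel` are hypothetical stand-ins.

```java
import java.util.Arrays;
import java.util.stream.Stream;

// Hypothetical stand-ins; each ChatResponse carries one partial chunk of text.
record Prompt(String userText) {}
record ChatResponse(String chunkText) {}

// Mirrors the shape of StreamingChatModel, with java.util.stream.Stream
// standing in for Reactor's Flux.
interface MiniStreamingChatModel {
    Stream<ChatResponse> stream(Prompt prompt);

    // Convenience overload: wrap the text in a Prompt and keep only each chunk's text.
    default Stream<String> stream(String message) {
        return stream(new Prompt(message)).map(ChatResponse::chunkText);
    }
}

// Toy model that "streams" the input back one word at a time.
class WordByWordModel implements MiniStreamingChatModel {
    @Override
    public Stream<ChatResponse> stream(Prompt prompt) {
        return Arrays.stream(prompt.userText().split(" "))
                .map(word -> new ChatResponse(word + " "));
    }
}

class StreamingDemo {
    public static void main(String[] args) {
        // Each element arrives as a separate chunk; a UI would render them incrementally.
        new WordByWordModel().stream("one two three").forEach(System.out::print);
        System.out.println();
    }
}
```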

Prompt

public class Prompt implements ModelRequest<List<Message>> {

private final List<Message> messages;

private ChatOptions modelOptions;

@Override

public ChatOptions getOptions() {...}

@Override

public List<Message> getInstructions() {...}

// constructors and utility methods omitted

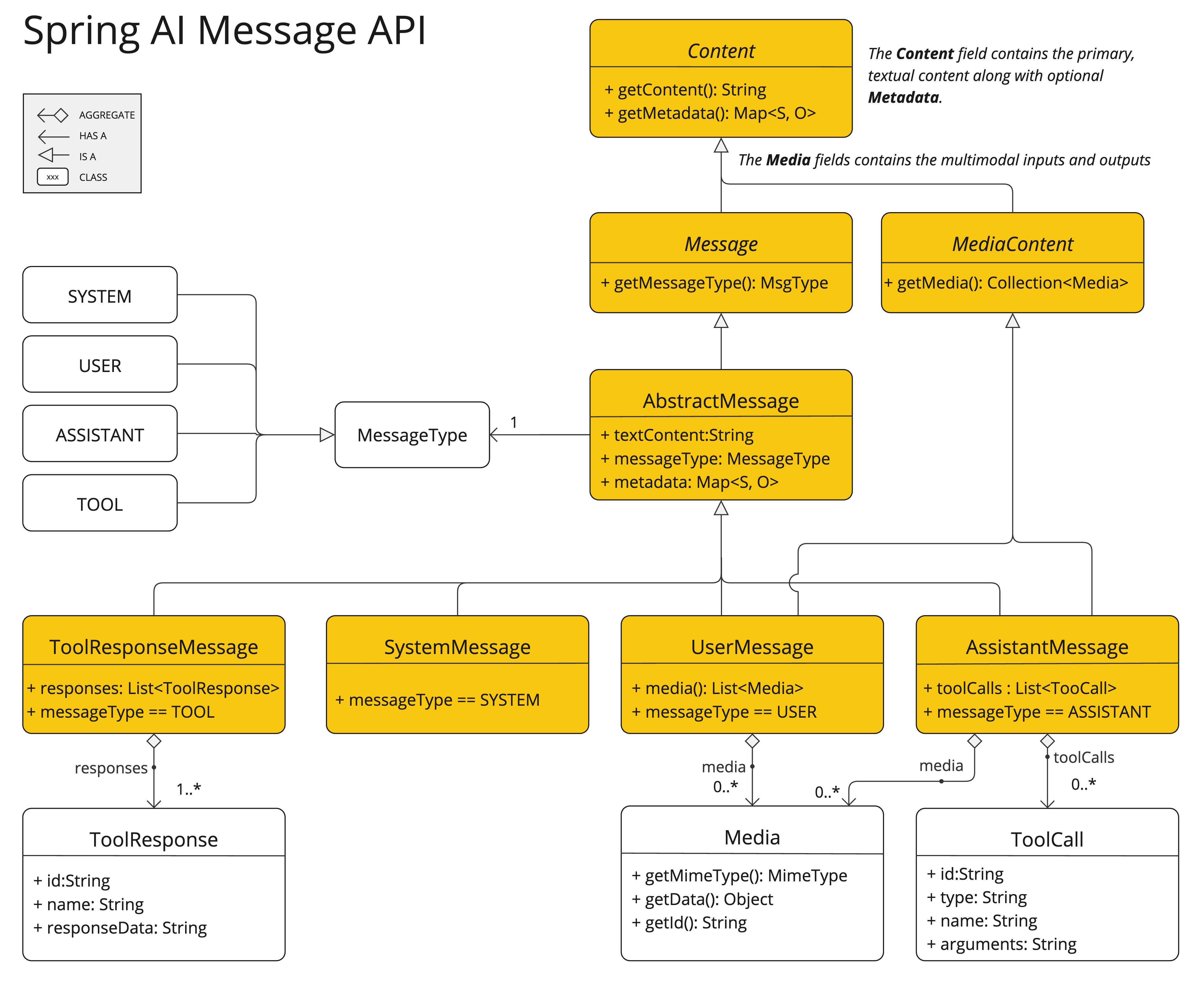

}Message

The Message interface encapsulates the textual content of a Prompt, a collection of metadata attributes, and a categorization known as MessageType.

The interfaces are defined as follows:

public interface Content {

    String getText();

    Map<String, Object> getMetadata();
}

public interface Message extends Content {

    MessageType getMessageType();
}

Multimodal message types also implement the MediaContent interface, which provides a list of Media content objects.

public interface MediaContent extends Content {

    Collection<Media> getMedia();
}

The Message interface has various implementations that correspond to the categories of messages an AI model can process.
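To show how a multimodal message fits these interfaces, the sketch below re-declares simplified copies of Content, Message, and MediaContent and implements them in a hypothetical `SimpleMediaMessage`. The `Media` record (a MIME type plus a URL string) and the `MessageType` constants are simplifying assumptions, not Spring AI's own definitions; the code is plain Java 17+.

```java
import java.util.Collection;
import java.util.List;
import java.util.Map;

// Simplified stand-ins for the types referenced above (assumptions for illustration).
enum MessageType { SYSTEM, USER, ASSISTANT, TOOL }

record Media(String mimeType, String url) {}

interface Content {
    String getText();
    Map<String, Object> getMetadata();
}

interface Message extends Content {
    MessageType getMessageType();
}

interface MediaContent extends Content {
    Collection<Media> getMedia();
}

// A hypothetical multimodal user message: text plus attached media.
// It implements both Message and MediaContent; their shared Content
// methods are implemented once.
class SimpleMediaMessage implements Message, MediaContent {
    private final String text;
    private final List<Media> media;

    SimpleMediaMessage(String text, List<Media> media) {
        this.text = text;
        this.media = List.copyOf(media); // defensive, unmodifiable copy
    }

    @Override public String getText() { return text; }
    @Override public Map<String, Object> getMetadata() { return Map.of(); }
    @Override public MessageType getMessageType() { return MessageType.USER; }
    @Override public Collection<Media> getMedia() { return media; }
}
```

Because `SimpleMediaMessage` is still a `Message`, code that only understands text can ignore the media, while a multimodal-capable implementation can check for `MediaContent` and forward the attachments.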

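Chat completion endpoints distinguish between message categories based on conversational roles, which are effectively mapped by the MessageType.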

For instance, OpenAI recognizes message categories for distinct conversational roles such as system, user, function, or assistant.

While the term MessageType might imply a specific message format, in this context it designates the role a message plays in the dialogue.

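For AI models that do not use specific roles, the UserMessage implementation acts as the standard category, typically representing user-generated queries or instructions.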
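The role mapping described above can be sketched as follows. The enum, the records, and `RoleMapping` are hypothetical plain Java 17+ stand-ins; the lowercase role strings follow the OpenAI-style names listed in the text (system, user, function, assistant), and the "role: text" rendering is only an illustration of a provider adapter converting a Prompt into its native format.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical role enum mirroring the conversational roles named in the text.
enum MessageType { SYSTEM, USER, ASSISTANT, FUNCTION }

// Simplified message: a role plus text (not Spring AI's Message classes).
record RoleMessage(MessageType type, String text) {}

// Simplified prompt holding an ordered conversation.
record Prompt(List<RoleMessage> messages) {}

class RoleMapping {
    // Map the provider-neutral role to an OpenAI-style role string.
    static String toProviderRole(MessageType type) {
        return switch (type) {
            case SYSTEM -> "system";
            case USER -> "user";
            case ASSISTANT -> "assistant";
            case FUNCTION -> "function";
        };
    }

    // Render the prompt as "role: text" lines, standing in for the
    // serialization a provider adapter would perform.
    static String render(Prompt prompt) {
        return prompt.messages().stream()
                .map(m -> toProviderRole(m.type()) + ": " + m.text())
                .collect(Collectors.joining("\n"));
    }
}
```

A prompt built from a SYSTEM message "You are terse." followed by a USER message "Hi" renders as two lines, `system: You are terse.` and `user: Hi`, preserving the conversation order.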

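To understand the practical application of Prompt and Message and how they relate, especially in the context of these roles or message categories, see the detailed explanation in the Prompts section.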

Chat Options

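Represents the options that can be passed to the AI model. ChatOptions is an interface that extends ModelOptions and defines a small set of portable options that can be passed to any AI model.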

The ChatOptions interface is defined as follows:

public interface ChatOptions extends ModelOptions {

    String getModel();
    Float getFrequencyPenalty();
    Integer getMaxTokens();
    Float getPresencePenalty();
    List<String> getStopSequences();
    Float getTemperature();
    Integer getTopK();
    Float getTopP();

    ChatOptions copy();
}

In addition, every model-specific ChatModel/StreamingChatModel implementation can define its own options that are passed to the AI model. For example, the OpenAI Chat Completion model has its own options, such as logitBias, seed, and user.

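This is a powerful feature: developers can set model-specific options when the application starts and then override them at runtime through the Prompt request.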
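The split between portable and model-specific options can be sketched with a small interface hierarchy. In this plain Java 17+ example, `PortableChatOptions` is a hypothetical two-getter subset of the ChatOptions listing above, and `ProviderChatOptions` adds a provider-only `seed`, named after the OpenAI option mentioned in the text; neither class is a Spring AI type, and "model-a" is a made-up model name.

```java
// Hypothetical portable options: a two-getter subset of the ChatOptions listing above.
interface PortableChatOptions {
    String getModel();
    Float getTemperature();
}

// Hypothetical provider-specific options: the portable getters plus a
// provider-only "seed", similar in spirit to OpenAI's seed option.
class ProviderChatOptions implements PortableChatOptions {
    private final String model;
    private final Float temperature;
    private final Integer seed;

    ProviderChatOptions(String model, Float temperature, Integer seed) {
        this.model = model;
        this.temperature = temperature;
        this.seed = seed;
    }

    @Override public String getModel() { return model; }
    @Override public Float getTemperature() { return temperature; }

    // Only code that knows the concrete provider type can read this.
    Integer getSeed() { return seed; }
}

class OptionsDemo {
    // Portable code depends only on the shared interface.
    static String describe(PortableChatOptions options) {
        return options.getModel() + " @ " + options.getTemperature();
    }
}
```

Portable code such as `describe` keeps working with any provider's options, while provider-aware code can still reach extras like `getSeed()`.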

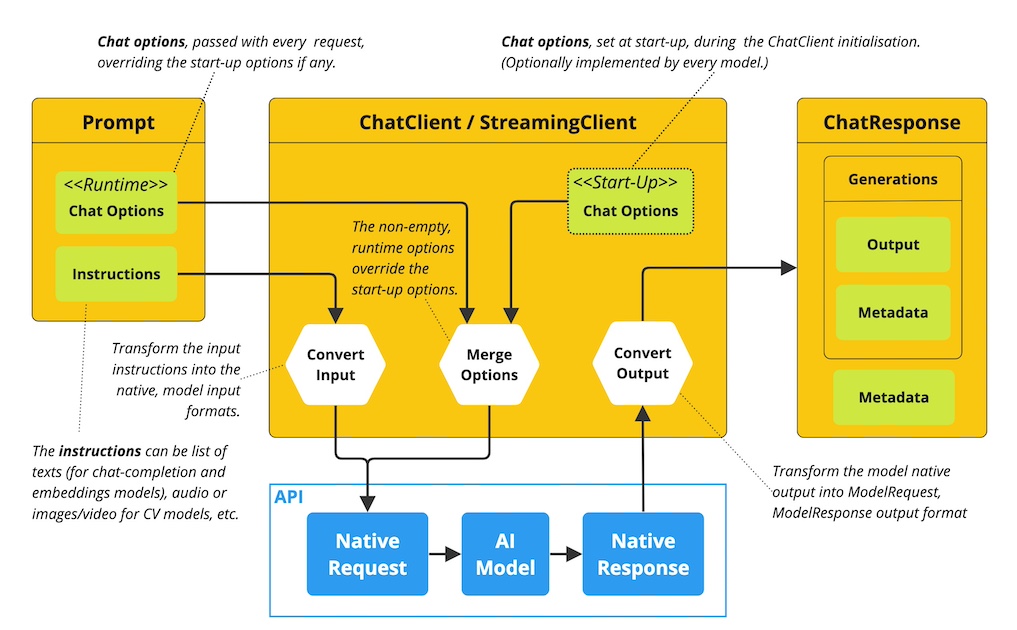

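Spring AI provides a sophisticated system for configuring and using chat models. It lets you set default configurations at start-up while retaining the flexibility to override those settings on a per-request basis. This lets developers work with different AI models and adjust parameters as needed, all through the consistent interface that the Spring AI framework provides.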

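The flow diagram above shows how Spring AI handles the configuration and execution of chat models, combining start-up and runtime options. Its stages are: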

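- Start-up configuration: the ChatModel/StreamingChatModel is initialized with "start-up" chat options. These options are set during ChatModel initialization and provide the default configuration.
- Runtime configuration: for each request, the Prompt can contain runtime chat options, which can override the start-up options.
- Option merging: the "Merge Options" step combines the start-up and runtime options. When runtime options are provided, they take precedence over the start-up options.
- Input processing: the "Convert Input" step transforms the input instructions into the native, model-specific format.
- Output processing: the "Convert Output" step transforms the model's response into the standardized ChatResponse format.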

Separating start-up and runtime options allows both global configuration and request-specific adjustments.
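The "Merge Options" rule (runtime values take precedence over start-up values) can be sketched field by field. This plain Java 17+ example uses a hypothetical `SimpleChatOptions` record in which `null` means "not set"; it illustrates the precedence rule only, and the real merge logic in each Spring AI implementation may differ.

```java
// Hypothetical, simplified options holder: a null field means "not set".
record SimpleChatOptions(String model, Float temperature, Integer maxTokens) {

    // Runtime values win when present; otherwise fall back to the start-up defaults.
    static SimpleChatOptions merge(SimpleChatOptions startup, SimpleChatOptions runtime) {
        if (runtime == null) {
            return startup; // no per-request overrides at all
        }
        return new SimpleChatOptions(
                firstNonNull(runtime.model(), startup.model()),
                firstNonNull(runtime.temperature(), startup.temperature()),
                firstNonNull(runtime.maxTokens(), startup.maxTokens()));
    }

    private static <T> T firstNonNull(T preferred, T fallback) {
        return preferred != null ? preferred : fallback;
    }
}
```

For example, merging start-up options `("model-a", 0.7f, 256)` with runtime options `(null, 0.2f, null)` keeps the model and token limit from start-up but uses the per-request temperature of 0.2.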

ChatResponse

The structure of the ChatResponse class is as follows:

public class ChatResponse implements ModelResponse<Generation> {

    private final ChatResponseMetadata chatResponseMetadata;

    private final List<Generation> generations;

    @Override
    public ChatResponseMetadata getMetadata() {...}

    @Override
    public List<Generation> getResults() {...}

    // other methods omitted
}

The ChatResponse class holds the AI model's output, with each Generation instance containing one of potentially multiple outputs resulting from a single prompt.

The ChatResponse class also carries ChatResponseMetadata, which holds metadata about the AI model's response.
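Reading a response that contains several candidate outputs might look like the sketch below. It is plain Java 17+ with hypothetical stand-in records (`AssistantOutput`, `Generation`, `ChatResponse`); metadata is modeled as a simple map, and keys such as "finishReason" and "model" are illustrative assumptions rather than Spring AI's metadata API.

```java
import java.util.List;
import java.util.Map;

// Hypothetical stand-ins for AssistantMessage, Generation and ChatResponse.
record AssistantOutput(String text) {}
record Generation(AssistantOutput output, Map<String, Object> metadata) {}
record ChatResponse(List<Generation> results, Map<String, Object> metadata) {

    // Convenience accessor for the first generation, or null when there is none.
    Generation getResult() {
        return results.isEmpty() ? null : results.get(0);
    }
}

class ResponseDemo {
    // Collect the text of every candidate output in the response, in order.
    static List<String> allTexts(ChatResponse response) {
        return response.results().stream()
                .map(g -> g.output().text())
                .toList();
    }
}
```

Per-candidate details live on each `Generation`, while details about the call as a whole (such as which model answered) sit on the response itself, matching the split between generation metadata and ChatResponseMetadata described above.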

Generation

Finally, the Generation class implements ModelResult and represents the model output (an assistant message) together with its related metadata:

public class Generation implements ModelResult<AssistantMessage> {

private final AssistantMessage assistantMessage;

private ChatGenerationMetadata chatGenerationMetadata;

@Override

public AssistantMessage getOutput() {...}

@Override

public ChatGenerationMetadata getMetadata() {...}

// other methods omitted

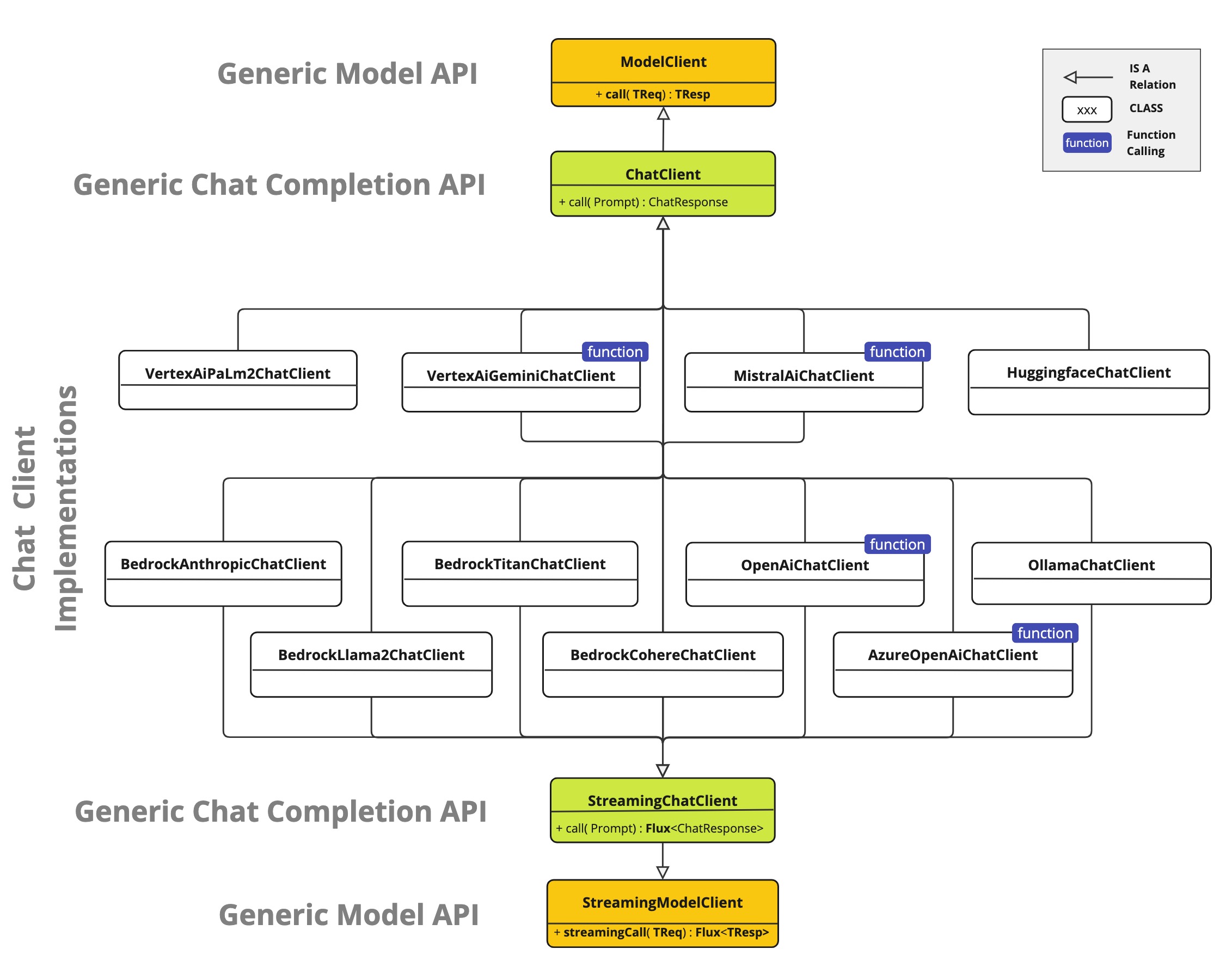

}可用实现

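This diagram illustrates how the unified ChatModel and StreamingChatModel interfaces are used to interact with AI chat models from different providers, making it easy to integrate and switch between AI services while keeping a consistent API for client applications.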

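- OpenAI Chat Completion (streaming, multimodality, and function-calling support)
- Microsoft Azure Open AI Chat Completion (streaming and function-calling support)
- Ollama Chat Completion (streaming, multimodality, and function-calling support)
- Hugging Face Chat Completion (no streaming support)
- Google Vertex AI Gemini Chat Completion (streaming, multimodality, and function-calling support)
- Mistral AI Chat Completion (streaming and function-calling support)
- Anthropic Chat Completion (streaming and function-calling support)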

Find a detailed comparison of the available chat models in the Chat Models Comparison section.