DeepSeek Chat

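Spring AI supports the various AI language models from DeepSeek. You can interact with DeepSeek language models and build a multilingual conversational assistant based on DeepSeek models.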

Prerequisites

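You will need to create an API key with DeepSeek to access the DeepSeek language models.

Create an account on the DeepSeek registration page and generate a token on the API Keys page.

The Spring AI project defines a configuration property named spring.ai.deepseek.api-key that you should set to the value of the API key obtained from the API Keys page.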

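You can set this configuration property in your application.properties file:

```properties
spring.ai.deepseek.api-key=<your-deepseek-api-key>
```

For enhanced security when handling sensitive information such as API keys, you can use a Spring property placeholder such as ${DEEPSEEK_API_KEY} to reference a custom environment variable: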

```yaml
# In application.yml
spring:
  ai:
    deepseek:
      api-key: ${DEEPSEEK_API_KEY}
```

```bash
# In your environment or .env file
export DEEPSEEK_API_KEY=<your-deepseek-api-key>
```

You can also set this configuration programmatically in your application code:

```java
// Retrieve the API key from a secure source or an environment variable
String apiKey = System.getenv("DEEPSEEK_API_KEY");
```

Auto-configuration

Spring AI provides Spring Boot auto-configuration for the DeepSeek chat model.

To enable it, add the following dependency to your project's Maven pom.xml file:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-starter-model-deepseek</artifactId>
</dependency>
```

or to your Gradle build.gradle file:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-starter-model-deepseek'
}
```

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

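Chat Properties

Retry Properties

The prefix spring.ai.retry is used as the property prefix that lets you configure the retry mechanism for the DeepSeek chat model.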

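| Property | Description | Default |
|---|---|---|
| spring.ai.retry.max-attempts | Maximum number of retry attempts. | 10 |
| spring.ai.retry.backoff.initial-interval | Initial sleep duration for the exponential backoff policy. | 2 sec. |
| spring.ai.retry.backoff.multiplier | Backoff interval multiplier. | 5 |
| spring.ai.retry.backoff.max-interval | Maximum backoff duration. | 3 min. |
| spring.ai.retry.on-client-errors | If false, throw a NonTransientAiException and do not attempt a retry for 4xx client error codes. | false |
| spring.ai.retry.exclude-on-http-codes | List of HTTP status codes that should not trigger a retry (e.g. to throw NonTransientAiException). | empty |
| spring.ai.retry.on-http-codes | List of HTTP status codes that should trigger a retry (e.g. to throw TransientAiException). | empty |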
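To see what the default retry settings add up to, the following JDK-only sketch computes the sleep before each retry under the usual exponential-backoff rule: start at initial-interval, multiply by multiplier after every retry, and never exceed max-interval. It assumes, as in Spring Retry's SimpleRetryPolicy, that max-attempts counts the first call as well, so 10 attempts means at most 9 sleeps. The class name is hypothetical, and the code does not call Spring Retry.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: models an exponential backoff schedule without using Spring Retry.
final class RetryBackoffSketch {

    // Returns the sleep before each retry. maxAttempts counts the first call too,
    // so there are at most maxAttempts - 1 sleeps.
    static List<Duration> schedule(int maxAttempts, Duration initial, double multiplier, Duration max) {
        List<Duration> sleeps = new ArrayList<>();
        double current = initial.toMillis();
        for (int retry = 1; retry < maxAttempts; retry++) {
            // Cap each sleep at the maximum interval.
            sleeps.add(Duration.ofMillis((long) Math.min(current, max.toMillis())));
            // Grow the interval for the next retry.
            current *= multiplier;
        }
        return sleeps;
    }

    public static void main(String[] args) {
        // Defaults from the table: 10 attempts, 2 s initial interval, multiplier 5, 3 min cap.
        List<Duration> sleeps = schedule(10, Duration.ofSeconds(2), 5.0, Duration.ofMinutes(3));
        long totalSeconds = sleeps.stream().mapToLong(Duration::toSeconds).sum();
        System.out.println(sleeps.stream().map(Duration::toSeconds).toList());
        // [2, 10, 50, 180, 180, 180, 180, 180, 180]
        System.out.println("worst-case total wait: " + totalSeconds + " s"); // 1142 s
    }
}
```

Assuming every attempt fails with a retryable error, the defaults therefore allow about 19 minutes of cumulative waiting (1142 s, not counting the time spent on the requests themselves), which may be worth lowering for interactive endpoints.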

Connection Properties

The prefix spring.ai.deepseek is used as the property prefix that lets you connect to DeepSeek.

| Property | Description | Default |
|---|---|---|
| spring.ai.deepseek.base-url | The URL to connect to | https://api.deepseek.com |
| spring.ai.deepseek.api-key | The API key | - |

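Configuration Properties

The prefix spring.ai.deepseek.chat is the property prefix that lets you configure the chat model implementation for DeepSeek.

| Property | Description | Default |
|---|---|---|
| spring.ai.deepseek.chat.enabled | Enable the DeepSeek chat model. | true |
| spring.ai.deepseek.chat.base-url | Optional override of spring.ai.deepseek.base-url to provide a chat-specific URL | https://api.deepseek.com/ |
| spring.ai.deepseek.chat.api-key | Optional override of spring.ai.deepseek.api-key to provide a chat-specific API key | - |
| spring.ai.deepseek.chat.completions-path | The path to the chat completions endpoint | /chat/completions |
| spring.ai.deepseek.chat.beta-prefix-path | The path prefix for beta feature endpoints | /beta |
| spring.ai.deepseek.chat.options.model | ID of the model to use. You can use either deepseek-reasoner or deepseek-chat. | deepseek-chat |
| spring.ai.deepseek.chat.options.frequencyPenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood of repeating the same line verbatim. | 0.0 |
| spring.ai.deepseek.chat.options.maxTokens | The maximum number of tokens to generate in the chat completion. The total length of input tokens and generated tokens is limited by the model's context length. | - |
| spring.ai.deepseek.chat.options.presencePenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood of talking about new topics. | 0.0 |
| spring.ai.deepseek.chat.options.stop | Up to 4 sequences where the API will stop generating further tokens. | - |
| spring.ai.deepseek.chat.options.temperature | The sampling temperature to use, between 0 and 2. Higher values such as 0.8 make the output more random, while lower values such as 0.2 make it more focused and deterministic. We generally recommend altering this or topP, but not both. | 1.0 |
| spring.ai.deepseek.chat.options.topP | An alternative to sampling with temperature, called nucleus sampling, where the model considers only the tokens that make up the top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered. We generally recommend altering this or temperature, but not both. | 1.0 |
| spring.ai.deepseek.chat.options.logprobs | Whether to return log probabilities of the output tokens. If true, returns the log probability of each output token in the message content. | - |
| spring.ai.deepseek.chat.options.topLogprobs | An integer between 0 and 20 specifying the number of most likely tokens to return at each token position, each with an associated log probability. logprobs must be set to true if this parameter is used. | - |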
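The ranges in the table lend themselves to a quick client-side check. The sketch below is a hypothetical, JDK-only validator (not part of Spring AI) for a few of the documented constraints: both penalties in [-2.0, 2.0], temperature in [0, 2], at most 4 stop sequences, and topLogprobs in [0, 20] only when logprobs is true. The DeepSeek API remains the authority on what it actually accepts.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical client-side check of the ranges listed in the configuration table.
// A null field means "not set", matching options that have no default.
final class DeepSeekOptionsCheck {

    record Options(Double frequencyPenalty, Double presencePenalty, Double temperature,
                   List<String> stop, Boolean logprobs, Integer topLogprobs) {}

    static List<String> validate(Options o) {
        List<String> errors = new ArrayList<>();
        checkRange(errors, "frequencyPenalty", o.frequencyPenalty(), -2.0, 2.0);
        checkRange(errors, "presencePenalty", o.presencePenalty(), -2.0, 2.0);
        checkRange(errors, "temperature", o.temperature(), 0.0, 2.0);
        if (o.stop() != null && o.stop().size() > 4) {
            errors.add("stop: at most 4 sequences");
        }
        if (o.topLogprobs() != null) {
            if (o.topLogprobs() < 0 || o.topLogprobs() > 20) {
                errors.add("topLogprobs: must be between 0 and 20");
            }
            // topLogprobs is only meaningful when logprobs is enabled.
            if (!Boolean.TRUE.equals(o.logprobs())) {
                errors.add("topLogprobs: requires logprobs=true");
            }
        }
        return errors;
    }

    private static void checkRange(List<String> errors, String name, Double value, double min, double max) {
        if (value != null && (value < min || value > max)) {
            errors.add(name + ": must be between " + min + " and " + max);
        }
    }

    public static void main(String[] args) {
        var ok = new Options(0.5, 0.0, 0.8, List.of("```"), null, null);
        var bad = new Options(3.0, null, 1.0, null, false, 5);
        System.out.println(validate(ok));  // []
        System.out.println(validate(bad)); // two errors: frequencyPenalty out of range, topLogprobs without logprobs
    }
}
```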

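You can override the common spring.ai.deepseek.base-url and spring.ai.deepseek.api-key properties for the chat model. If set, spring.ai.deepseek.chat.base-url and spring.ai.deepseek.chat.api-key take precedence over the common properties.

All properties prefixed with spring.ai.deepseek.chat.options can be overridden at runtime by adding request-specific runtime options to the Prompt call.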
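The override rule and the path properties can be pictured with a small hypothetical sketch. It assumes that a missing or blank chat-level value falls back to the common spring.ai.deepseek.* value, and that an endpoint URL is the base URL followed by completions-path (with beta-prefix-path in between for beta features). The helper also normalizes slashes, since the two base-url defaults in the tables differ by a trailing slash. The names are illustrative and do not mirror Spring AI's internals.

```java
// Hypothetical sketch: resolves chat-level overrides and joins endpoint URLs.
final class DeepSeekEndpointSketch {

    // A chat-specific value wins when it is set; otherwise the common value is used.
    static String resolve(String chatValue, String commonValue) {
        return (chatValue != null && !chatValue.isBlank()) ? chatValue : commonValue;
    }

    // Joins URL segments so that exactly one '/' separates them.
    static String join(String base, String... paths) {
        StringBuilder url = new StringBuilder(base.replaceAll("/+$", ""));
        for (String path : paths) {
            String trimmed = path.replaceAll("^/+|/+$", "");
            if (!trimmed.isEmpty()) {
                url.append('/').append(trimmed);
            }
        }
        return url.toString();
    }

    public static void main(String[] args) {
        String baseUrl = resolve(null, "https://api.deepseek.com"); // no chat override set
        String apiKey = resolve("chat-key", "common-key");          // chat override set
        System.out.println(join(baseUrl, "/chat/completions"));
        // https://api.deepseek.com/chat/completions
        System.out.println(join(baseUrl, "/beta", "/chat/completions"));
        // https://api.deepseek.com/beta/chat/completions
        System.out.println(apiKey); // chat-key
    }
}
```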

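Runtime Options

The DeepSeekChatOptions.java class provides model configurations, such as the model to use, the temperature, the frequency penalty, and so on.

On start-up, the default options can be configured with the DeepSeekChatModel(api, options) constructor or the spring.ai.deepseek.chat.options.* properties.

At runtime, you can override the default options by adding new, request-specific options to the Prompt call.

For example, to override the default model and temperature for a specific request: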

```java
ChatResponse response = chatModel.call(
    new Prompt(
        "Generate the names of 5 famous pirates. Please provide the JSON response without any code block markers such as ```json```.",
        DeepSeekChatOptions.builder()
            .model(DeepSeekApi.ChatModel.DEEPSEEK_CHAT.getValue())
            .temperature(0.8)
            .build()
    ));
```

In addition to the model-specific DeepSeekChatOptions, you can use a portable ChatOptions instance, created with ChatOptions#builder().
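Conceptually, request-specific options are layered over the start-up defaults: any option set on the Prompt replaces the default, and any option left unset keeps it. The JDK-only sketch below models that rule with a hypothetical ChatOpts record; it illustrates the merge semantics described in this section and is not Spring AI's implementation.

```java
// Hypothetical model of how request options override start-up defaults.
final class OptionsMergeSketch {

    record ChatOpts(String model, Double temperature, Integer maxTokens) {

        // Fields set on the request win; null fields fall back to these defaults.
        ChatOpts overriddenBy(ChatOpts request) {
            return new ChatOpts(
                    request.model() != null ? request.model() : model,
                    request.temperature() != null ? request.temperature() : temperature,
                    request.maxTokens() != null ? request.maxTokens() : maxTokens);
        }
    }

    public static void main(String[] args) {
        // Defaults, e.g. bound from spring.ai.deepseek.chat.options.* properties.
        ChatOpts defaults = new ChatOpts("deepseek-chat", 1.0, 200);
        // Request-specific options: only the temperature is set.
        ChatOpts request = new ChatOpts(null, 0.8, null);
        System.out.println(defaults.overriddenBy(request));
        // ChatOpts[model=deepseek-chat, temperature=0.8, maxTokens=200]
    }
}
```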

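Sample Controller (Auto-configuration)

Create a new Spring Boot project and add spring-ai-starter-model-deepseek to your pom (or gradle) dependencies.

Add an application.properties file under the src/main/resources directory to enable and configure the DeepSeek chat model:

```properties
spring.ai.deepseek.api-key=YOUR_API_KEY
spring.ai.deepseek.chat.options.model=deepseek-chat
spring.ai.deepseek.chat.options.temperature=0.8
```

Replace YOUR_API_KEY with your DeepSeek API key.

This will create a DeepSeekChatModel implementation that you can inject into your classes.

Here is an example of a simple @RestController class that uses the chat model for text generation.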

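```java
import java.util.Map;

import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.deepseek.DeepSeekChatModel;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

import reactor.core.publisher.Flux;

@RestController
public class ChatController {

    private final DeepSeekChatModel chatModel;

    @Autowired
    public ChatController(DeepSeekChatModel chatModel) {
        this.chatModel = chatModel;
    }

    // Blocking call: returns the whole generation at once
    @GetMapping("/ai/generate")
    public Map<String, String> generate(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        return Map.of("generation", chatModel.call(message));
    }

    // Streaming call: emits ChatResponse chunks as they arrive
    @GetMapping("/ai/generateStream")
    public Flux<ChatResponse> generateStream(@RequestParam(value = "message", defaultValue = "Tell me a joke") String message) {
        var prompt = new Prompt(new UserMessage(message));
        return chatModel.stream(prompt);
    }
}
```

Chat Prefix Completion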

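Chat prefix completion follows the Chat Completion API: the user provides a prefix for the assistant's message, and the model completes the rest of the message.

When using prefix completion, make sure that the last message in the message list is a DeepSeekAssistantMessage.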
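To make the mechanics concrete: the response contains only the continuation of the prefix, and generation halts at the first stop sequence, which is typically not included in the returned text. The JDK-only sketch below simulates that server-side cut on a hard-coded, made-up model output, so no API is called; applyStop is a hypothetical helper name.

```java
import java.util.List;

// Hypothetical simulation of a stop sequence applied to prefix-completion output; no API call is made.
final class PrefixCompletionSketch {

    // Cuts the generated text at the earliest occurrence of any stop sequence;
    // the stop sequence itself is not kept.
    static String applyStop(String generated, List<String> stops) {
        int cut = generated.length();
        for (String stop : stops) {
            int idx = generated.indexOf(stop);
            if (idx >= 0 && idx < cut) {
                cut = idx;
            }
        }
        return generated.substring(0, cut);
    }

    public static void main(String[] args) {
        String prefix = "```python\n"; // content of the assistant prefix message
        // Made-up raw continuation: code, then a closing fence, then chatter we want to drop.
        String raw = "def sort_list(xs):\n    return sorted(xs)\n```\nThis sorts the list.";
        String code = applyStop(raw, List.of("```"));
        System.out.print(code); // prints only the two lines of Python code
        // If a fenced block is needed again, put the prefix back and close the fence.
        System.out.println(prefix + code + "```");
    }
}
```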

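Below is a complete Java code example of chat prefix completion. In this example, we set the assistant's message prefix to `` ```python\n `` to force the model to output Python code, and set the stop parameter to `` ``` `` to prevent the model from adding further explanations.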

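```java
import java.util.List;

import org.springframework.ai.chat.messages.Message;
import org.springframework.ai.chat.messages.UserMessage;
import org.springframework.ai.chat.model.ChatResponse;
import org.springframework.ai.chat.prompt.ChatOptions;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.deepseek.DeepSeekAssistantMessage;
import org.springframework.ai.deepseek.DeepSeekChatModel;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class CodeGenerateController {

    private final DeepSeekChatModel chatModel;

    @Autowired
    public CodeGenerateController(DeepSeekChatModel chatModel) {
        this.chatModel = chatModel;
    }

    @GetMapping("/ai/generatePythonCode")
    public String generate(@RequestParam(value = "message", defaultValue = "Please write quick sort code") String message) {
        UserMessage userMessage = new UserMessage(message);
        // The assistant prefix must be the last message; the model continues from it
        Message assistantMessage = DeepSeekAssistantMessage.prefixAssistantMessage("```python\n");
        // Stop generating as soon as the model emits the closing code fence
        Prompt prompt = new Prompt(List.of(userMessage, assistantMessage),
                ChatOptions.builder().stopSequences(List.of("```")).build());
        ChatResponse response = chatModel.call(prompt);
        return response.getResult().getOutput().getText();
    }
}
```

Reasoning Model (deepseek-reasoner)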

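deepseek-reasoner is a reasoning model developed by DeepSeek. Before delivering the final answer, the model first generates a Chain of Thought (CoT) to improve the accuracy of its response. The DeepSeek API gives users access to the CoT content generated by deepseek-reasoner, so that they can view, display, and extract it.

You can use DeepSeekAssistantMessage to get the CoT content generated by deepseek-reasoner.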

```java
// chatModel is an injected (or manually created) DeepSeekChatModel
public void deepSeekReasonerExample() {
    DeepSeekChatOptions promptOptions = DeepSeekChatOptions.builder()
            .model(DeepSeekApi.ChatModel.DEEPSEEK_REASONER.getValue())
            .build();
    Prompt prompt = new Prompt("9.11 and 9.8, which is greater?", promptOptions);
    ChatResponse response = chatModel.call(prompt);

    // Get the CoT content generated by deepseek-reasoner; only available when using the deepseek-reasoner model
    DeepSeekAssistantMessage deepSeekAssistantMessage = (DeepSeekAssistantMessage) response.getResult().getOutput();
    String reasoningContent = deepSeekAssistantMessage.getReasoningContent();
    // The final answer
    String text = deepSeekAssistantMessage.getText();
}
```

Multi-round Conversation with the Reasoning Model

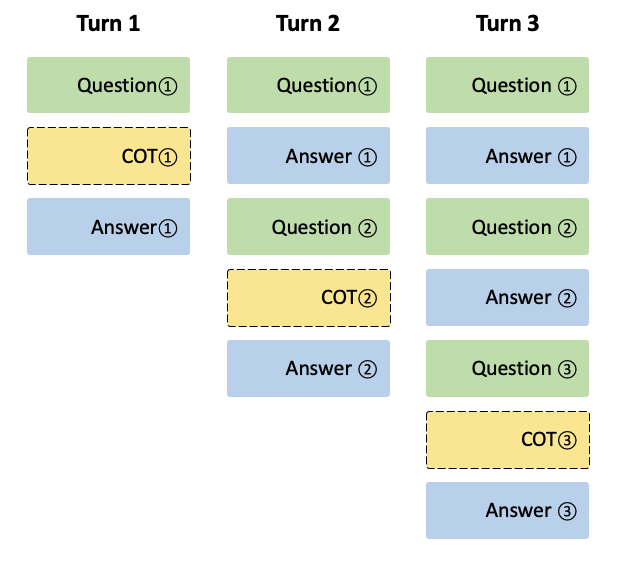

在对话的每一轮中,模型输出 CoT (reasoning_content) 和最终答案 (content)。在下一轮对话中,前几轮的 CoT 不会连接到上下文中,如下图所示:

请注意,如果输入消息序列中包含 reasoning_content 字段,API 将返回 400 错误。因此,在发出 API 请求之前,您应该从 API 响应中删除 reasoning_content 字段,如 API 示例所示。
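The same rule can be modelled without Spring AI: when building the next request, keep the role and final content of every earlier turn and drop any reasoning content. In the JDK-only sketch below, the Turn record and its reasoningContent field are hypothetical stand-ins for API messages, and the assistant reply is made up.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for chat messages; reasoningContent is only present on reasoner replies.
final class ReasonerHistorySketch {

    record Turn(String role, String content, String reasoningContent) {}

    // Builds the message list for the next request: same roles and contents,
    // but reasoningContent is always dropped so the request does not trigger a 400 error.
    static List<Turn> nextRequestHistory(List<Turn> conversation, String nextUserMessage) {
        List<Turn> history = new ArrayList<>();
        for (Turn turn : conversation) {
            history.add(new Turn(turn.role(), turn.content(), null));
        }
        history.add(new Turn("user", nextUserMessage, null));
        return history;
    }

    public static void main(String[] args) {
        List<Turn> conversation = List.of(
                new Turn("user", "9.11 and 9.8, which is greater?", null),
                new Turn("assistant", "9.8 is greater.", "Compare 9.11 with 9.80 ..."));
        List<Turn> history = nextRequestHistory(conversation, "How many Rs are there in the word 'strawberry'?");
        history.forEach(System.out::println); // every Turn prints reasoningContent=null
    }
}
```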

```java
public String deepSeekReasonerMultiRoundExample() {
    List<Message> messages = new ArrayList<>();
    messages.add(new UserMessage("9.11 and 9.8, which is greater?"));
    DeepSeekChatOptions promptOptions = DeepSeekChatOptions.builder()
            .model(DeepSeekApi.ChatModel.DEEPSEEK_REASONER.getValue())
            .build();

    // Round 1
    Prompt prompt = new Prompt(messages, promptOptions);
    ChatResponse response = chatModel.call(prompt);
    DeepSeekAssistantMessage deepSeekAssistantMessage = (DeepSeekAssistantMessage) response.getResult().getOutput();
    String reasoningContent = deepSeekAssistantMessage.getReasoningContent();
    String text = deepSeekAssistantMessage.getText();

    // Carry only the final answer into the history; the reasoning content is deliberately left out
    messages.add(new AssistantMessage(Objects.requireNonNull(text)));
    messages.add(new UserMessage("How many Rs are there in the word 'strawberry'?"));

    // Round 2
    Prompt prompt2 = new Prompt(messages, promptOptions);
    ChatResponse response2 = chatModel.call(prompt2);
    DeepSeekAssistantMessage deepSeekAssistantMessage2 = (DeepSeekAssistantMessage) response2.getResult().getOutput();
    String reasoningContent2 = deepSeekAssistantMessage2.getReasoningContent();
    return deepSeekAssistantMessage2.getText();
}
```

Manual Configuration

DeepSeekChatModel implements ChatModel and StreamingChatModel and uses the low-level DeepSeekApi client to connect to the DeepSeek service.

Add the spring-ai-deepseek dependency to your project's Maven pom.xml file:

```xml
<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-deepseek</artifactId>
</dependency>
```

or to your Gradle build.gradle file:

```groovy
dependencies {
    implementation 'org.springframework.ai:spring-ai-deepseek'
}
```

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

Next, create a DeepSeekChatModel and use it for text generation:

```java
var deepSeekApi = new DeepSeekApi(System.getenv("DEEPSEEK_API_KEY"));

var chatModel = new DeepSeekChatModel(deepSeekApi, DeepSeekChatOptions.builder()
        .model(DeepSeekApi.ChatModel.DEEPSEEK_CHAT.getValue())
        .temperature(0.4)
        .maxTokens(200)
        .build());

ChatResponse response = chatModel.call(
        new Prompt("Generate the names of 5 famous pirates."));

// Or use a streaming response
Flux<ChatResponse> streamResponse = chatModel.stream(
        new Prompt("Generate the names of 5 famous pirates."));
```

DeepSeekChatOptions provides the configuration information for the chat requests.

DeepSeekChatOptions.Builder is a fluent options builder.

Low-level DeepSeekApi Client

DeepSeekApi is a lightweight Java client for the DeepSeek API.

Here is a simple snippet showing how to use the API programmatically:

```java
DeepSeekApi deepSeekApi =
        new DeepSeekApi(System.getenv("DEEPSEEK_API_KEY"));

ChatCompletionMessage chatCompletionMessage =
        new ChatCompletionMessage("Hello world", Role.USER);

// Synchronous request
ResponseEntity<ChatCompletion> response = deepSeekApi.chatCompletionEntity(
        new ChatCompletionRequest(List.of(chatCompletionMessage), DeepSeekApi.ChatModel.DEEPSEEK_CHAT.getValue(), 0.7, false));

// Streaming request
Flux<ChatCompletionChunk> streamResponse = deepSeekApi.chatCompletionStream(
        new ChatCompletionRequest(List.of(chatCompletionMessage), DeepSeekApi.ChatModel.DEEPSEEK_CHAT.getValue(), 0.7, true));
```

For further information, refer to the DeepSeekApi.java JavaDoc.
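To show what a client like this sends over the wire, here is a JDK-only sketch that builds (but does not send) an equivalent HTTP request with java.net.http. It assumes DeepSeek's OpenAI-compatible endpoint, POST https://api.deepseek.com/chat/completions with a Bearer token and a JSON body holding model, messages, temperature and stream. The hand-written JSON (with only minimal escaping) and the helper names are for illustration only; DeepSeekApi's actual serialization may differ.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch of the HTTP request behind a chat completion call; nothing is sent.
final class RawChatRequestSketch {

    // Minimal JSON string escaping for backslashes, quotes and newlines (enough for this demo).
    static String jsonString(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n") + "\"";
    }

    static HttpRequest build(String apiKey, String userText, String model, double temperature, boolean stream) {
        String body = "{\"model\":" + jsonString(model)
                + ",\"messages\":[{\"role\":\"user\",\"content\":" + jsonString(userText) + "}]"
                + ",\"temperature\":" + temperature
                + ",\"stream\":" + stream + "}";
        return HttpRequest.newBuilder(URI.create("https://api.deepseek.com/chat/completions"))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        String key = System.getenv().getOrDefault("DEEPSEEK_API_KEY", "demo-key");
        HttpRequest request = build(key, "Hello world", "deepseek-chat", 0.7, false);
        System.out.println(request.method() + " " + request.uri());
        // POST https://api.deepseek.com/chat/completions
        // Sending it would use HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())
    }
}
```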

DeepSeekApi Samples

- The DeepSeekApiIT.java test provides some general examples of how to use the lightweight library.