ETL 管道

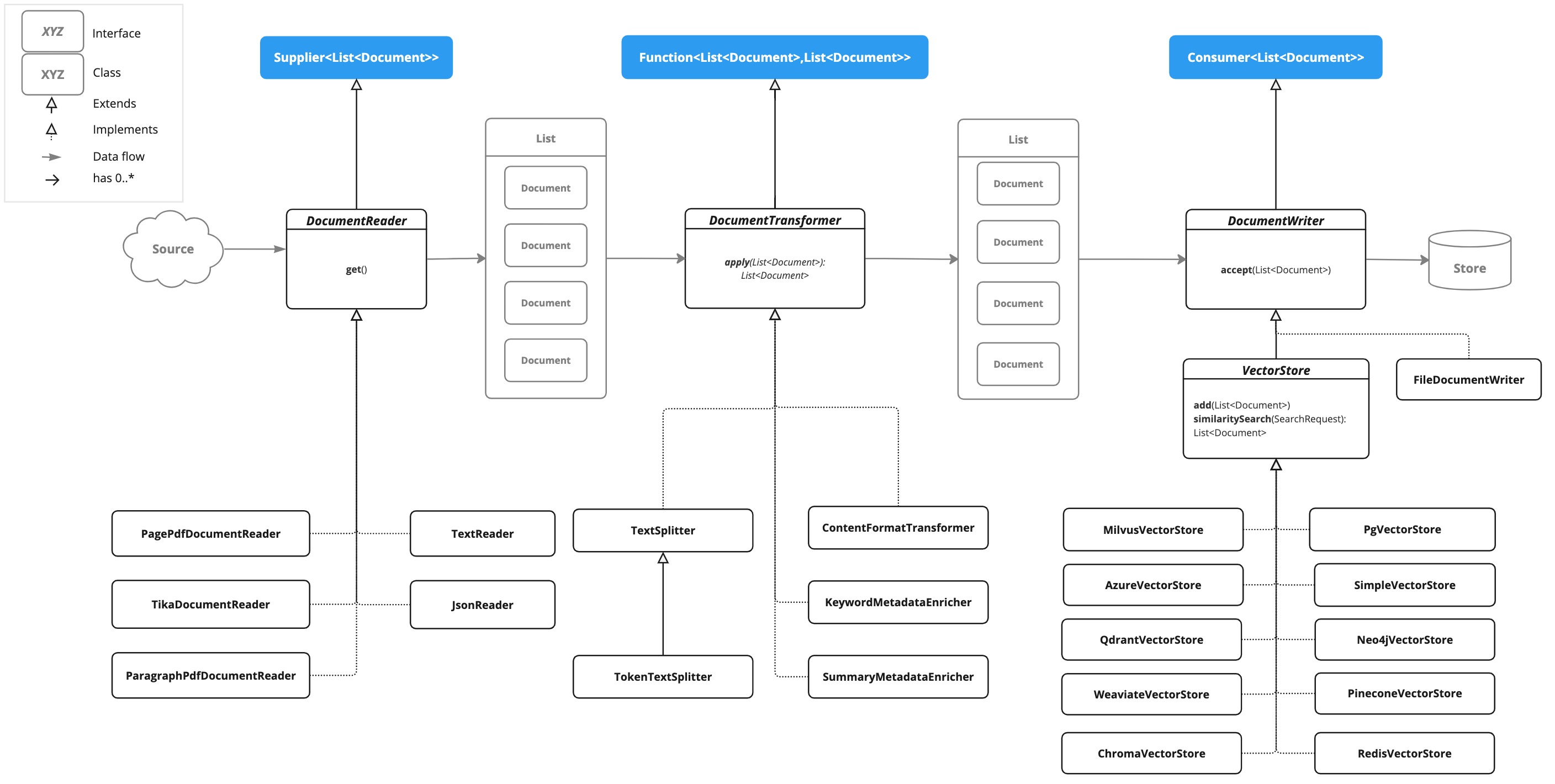

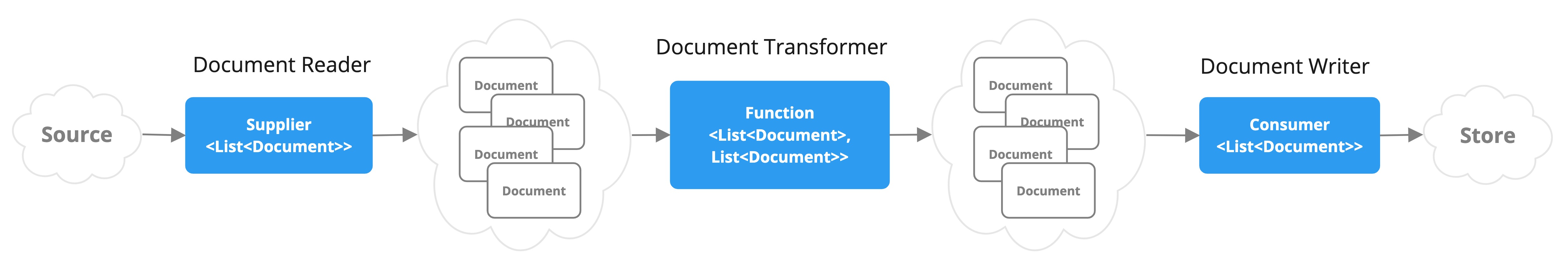

提取、转换和加载 (ETL) 框架是检索增强生成 (RAG) 用例中数据处理的支柱。 ETL 管道协调从原始数据源到结构化向量存储的数据流,确保数据以最佳格式供 AI 模型检索。 RAG 用例通过从数据主体中检索相关信息来增强生成模型的能力,从而提高生成输出的质量和相关性。

API 概述

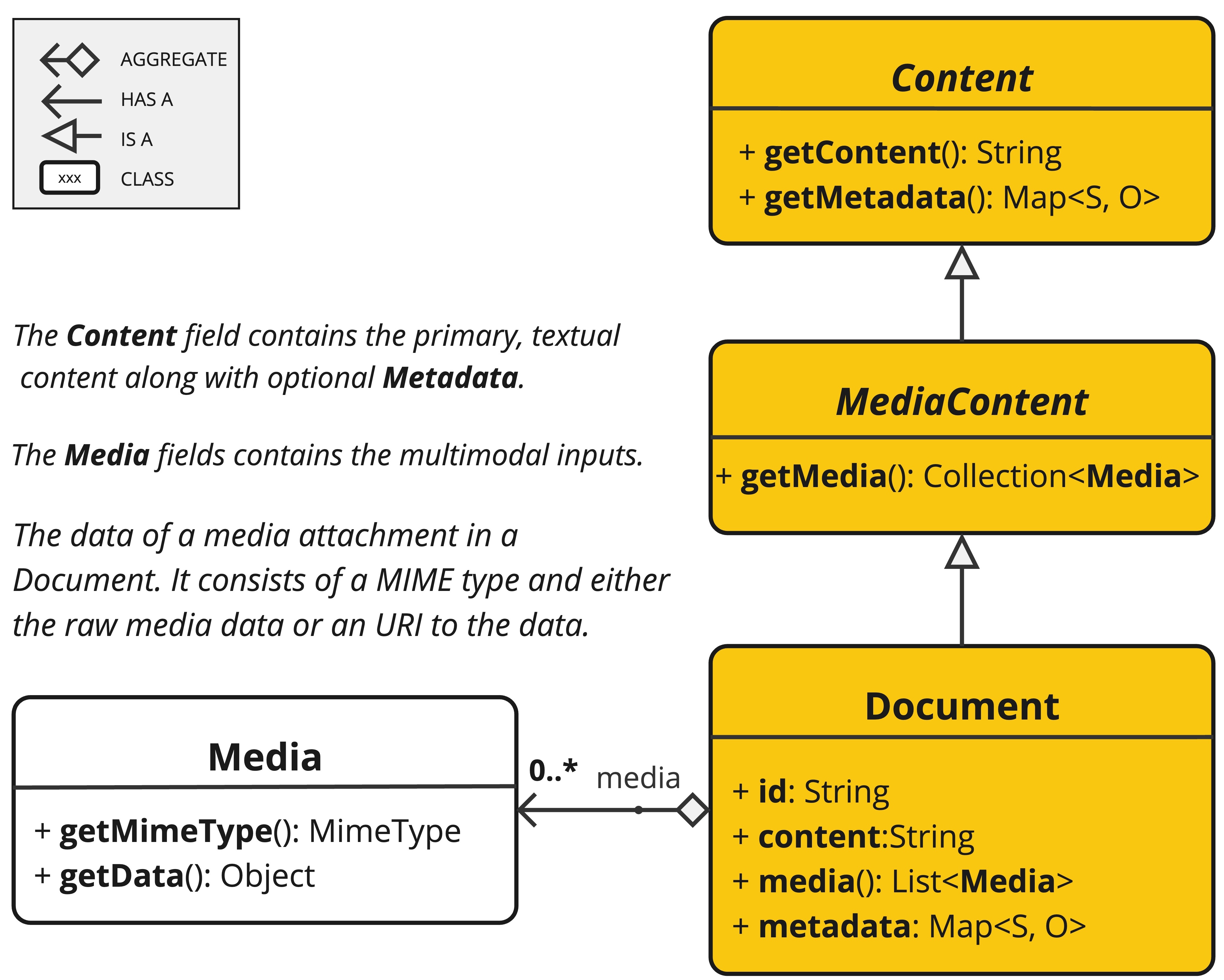

ETL 管道创建、转换和存储 Document 实例。

Document 类包含文本、元数据以及可选的附加媒体类型,如图像、音频和视频。

ETL 管道有三个主要组件:

-

DocumentReader,实现Supplier<List<Document>> -

DocumentTransformer,实现Function<List<Document>, List<Document>> -

DocumentWriter,实现Consumer<List<Document>>

Document 类的内容是通过 DocumentReader 从 PDF、文本文件和其他文档类型创建的。

要构建一个简单的 ETL 管道,您可以将每种类型的一个实例串联起来。

假设我们有以下三个 ETL 类型的实例:

-

PagePdfDocumentReader,DocumentReader的一个实现 -

TokenTextSplitter,DocumentTransformer的一个实现 -

VectorStore,DocumentWriter的一个实现

要执行将数据加载到向量数据库以用于检索增强生成模式的基本操作,请在 Java 函数式语法中使用以下代码。

vectorStore.accept(tokenTextSplitter.apply(pdfReader.get()));或者,您可以使用更自然地表达该领域的方法名称

vectorStore.write(tokenTextSplitter.split(pdfReader.read()));ETL 接口

ETL 管道由以下接口和实现组成。 详细的 ETL 类图显示在 ETL 类图 部分。

DocumentReader

提供来自不同来源的文档源。

public interface DocumentReader extends Supplier<List<Document>> {

default List<Document> read() {

return get();

}

}DocumentTransformer

作为处理工作流的一部分,转换一批文档。

public interface DocumentTransformer extends Function<List<Document>, List<Document>> {

default List<Document> transform(List<Document> transform) {

return apply(transform);

}

}DocumentReaders

JSON

JsonReader 处理 JSON 文档,将其转换为 Document 对象列表。

示例

@Component

class MyJsonReader {

private final Resource resource;

MyJsonReader(@Value("classpath:bikes.json") Resource resource) {

this.resource = resource;

}

List<Document> loadJsonAsDocuments() {

JsonReader jsonReader = new JsonReader(this.resource, "description", "content");

return jsonReader.get();

}

}构造函数选项

JsonReader 提供以下几个构造函数选项:

-

JsonReader(Resource resource) -

JsonReader(Resource resource, String… jsonKeysToUse) -

JsonReader(Resource resource, JsonMetadataGenerator jsonMetadataGenerator, String… jsonKeysToUse)

参数

-

resource:指向 JSON 文件的 SpringResource对象。 -

jsonKeysToUse:JSON 中应作为结果Document对象中文本内容的键数组。 -

jsonMetadataGenerator:一个可选的JsonMetadataGenerator,用于为每个Document创建元数据。

行为

JsonReader 按如下方式处理 JSON 内容:

-

它可以处理 JSON 数组和单个 JSON 对象。

-

对于每个 JSON 对象(无论是数组中的还是单个对象):

-

它根据指定的

jsonKeysToUse提取内容。 -

如果未指定键,它将整个 JSON 对象用作内容。

-

它使用提供的

JsonMetadataGenerator(如果未提供则使用空生成器)生成元数据。 -

它创建一个包含提取内容和元数据的

Document对象。

-

使用 JSON 指针

JsonReader 现在支持使用 JSON 指针检索 JSON 文档的特定部分。此功能允许您轻松地从复杂的 JSON 结构中提取嵌套数据。

示例 JSON 结构

[

{

"id": 1,

"brand": "Trek",

"description": "A high-performance mountain bike for trail riding."

},

{

"id": 2,

"brand": "Cannondale",

"description": "An aerodynamic road bike for racing enthusiasts."

}

]在此示例中,如果 JsonReader 配置为使用 "description" 作为 jsonKeysToUse,它将创建 Document 对象,其中内容是数组中每辆自行车的 "description" 字段的值。

文本

TextReader 处理纯文本文档,将其转换为 Document 对象列表。

示例

@Component

class MyTextReader {

private final Resource resource;

MyTextReader(@Value("classpath:text-source.txt") Resource resource) {

this.resource = resource;

}

List<Document> loadText() {

TextReader textReader = new TextReader(this.resource);

textReader.getCustomMetadata().put("filename", "text-source.txt");

return textReader.read();

}

}配置

-

setCharset(Charset charset):设置用于读取文本文件的字符集。默认为 UTF-8。 -

getCustomMetadata():返回一个可变映射,您可以在其中为文档添加自定义元数据。

行为

TextReader 按如下方式处理文本内容:

-

它将文本文件的全部内容读取到一个

Document对象中。 -

文件内容成为

Document的内容。 -

元数据会自动添加到

Document中:-

charset:用于读取文件的字符集(默认值:“UTF-8”)。 -

source:源文本文件的文件名。

-

-

通过

getCustomMetadata()添加的任何自定义元数据都包含在Document中。

注意事项

-

TextReader将整个文件内容读取到内存中,因此可能不适用于非常大的文件。 -

如果需要将文本拆分为更小的块,可以在读取文档后使用文本拆分器,例如

TokenTextSplitter:

List<Document> documents = textReader.get();

List<Document> splitDocuments = new TokenTextSplitter().apply(this.documents);-

读取器使用 Spring 的

Resource抽象,允许它从各种来源(类路径、文件系统、URL 等)读取。 -

可以使用

getCustomMetadata()方法将自定义元数据添加到读取器创建的所有文档中。

HTML (JSoup)

JsoupDocumentReader 使用 JSoup 库处理 HTML 文档,将其转换为 Document 对象列表。

示例

@Component

class MyHtmlReader {

private final Resource resource;

MyHtmlReader(@Value("classpath:/my-page.html") Resource resource) {

this.resource = resource;

}

List<Document> loadHtml() {

JsoupDocumentReaderConfig config = JsoupDocumentReaderConfig.builder()

.selector("article p") // 提取 <article> 标签内的段落

.charset("ISO-8859-1") // 使用 ISO-8859-1 编码

.includeLinkUrls(true) // 在元数据中包含链接 URL

.metadataTags(List.of("author", "date")) // 提取 author 和 date meta 标签

.additionalMetadata("source", "my-page.html") // 添加自定义元数据

.build();

JsoupDocumentReader reader = new JsoupDocumentReader(this.resource, config);

return reader.get();

}

}JsoupDocumentReaderConfig 允许您自定义 JsoupDocumentReader 的行为:

-

charset:指定 HTML 文档的字符编码(默认为 "UTF-8")。 -

selector:一个 JSoup CSS 选择器,用于指定从哪些元素中提取文本(默认为 "body")。 -

separator:用于连接来自多个选定元素的文本的字符串(默认为 "\n")。 -

allElements:如果为true,则从<body>元素中提取所有文本,忽略selector(默认为false)。 -

groupByElement:如果为true,则为selector匹配的每个元素创建一个单独的Document(默认为false)。 -

includeLinkUrls:如果为true,则提取绝对链接 URL 并将其添加到元数据中(默认为false)。 -

metadataTags:要从中提取内容的<meta>标签名称列表(默认为["description", "keywords"])。 -

additionalMetadata:允许您向所有创建的Document对象添加自定义元数据。

示例文档:my-page.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>My Web Page</title>

<meta name="description" content="A sample web page for Spring AI">

<meta name="keywords" content="spring, ai, html, example">

<meta name="author" content="John Doe">

<meta name="date" content="2024-01-15">

<link rel="stylesheet" href="style.css">

</head>

<body>

<header>

<h1>Welcome to My Page</h1>

</header>

<nav>

<ul>

<li><a href="/">Home</a></li>

<li><a href="/about">About</a></li>

</ul>

</nav>

<article>

<h2>Main Content</h2>

<p>This is the main content of my web page.</p>

<p>It contains multiple paragraphs.</p>

<a href="https://www.example.com">External Link</a>

</article>

<footer>

<p>© 2024 John Doe</p>

</footer>

</body>

</html>行为:

JsoupDocumentReader 处理 HTML 内容并根据配置创建 Document 对象:

-

selector决定用于文本提取的元素。 -

如果

allElements为true,则<body>中的所有文本都被提取到一个Document中。 -

如果

groupByElement为true,则匹配selector的每个元素都会创建一个单独的Document。 -

如果

allElements和groupByElement都不为true,则匹配selector的所有元素的文本将使用separator连接。 -

文档标题、指定

<meta>标签中的内容以及(可选)链接 URL 将添加到Document元数据中。 -

用于解析相对链接的基本 URI 将从 URL 资源中提取。

读取器保留选定元素的文本内容,但会删除其中的任何 HTML 标签。

Markdown

MarkdownDocumentReader 处理 Markdown 文档,将其转换为 Document 对象列表。

示例

@Component

class MyMarkdownReader {

private final Resource resource;

MyMarkdownReader(@Value("classpath:code.md") Resource resource) {

this.resource = resource;

}

List<Document> loadMarkdown() {

MarkdownDocumentReaderConfig config = MarkdownDocumentReaderConfig.builder()

.withHorizontalRuleCreateDocument(true)

.withIncludeCodeBlock(false)

.withIncludeBlockquote(false)

.withAdditionalMetadata("filename", "code.md")

.build();

MarkdownDocumentReader reader = new MarkdownDocumentReader(this.resource, config);

return reader.get();

}

}MarkdownDocumentReaderConfig 允许您自定义 MarkdownDocumentReader 的行为:

-

horizontalRuleCreateDocument:设置为true时,Markdown 中的水平线将创建新的Document对象。 -

includeCodeBlock:设置为true时,代码块将包含在与周围文本相同的Document中。设置为false时,代码块创建单独的Document对象。 -

includeBlockquote:设置为true时,引用块将包含在与周围文本相同的Document中。设置为false时,引用块创建单独的Document对象。 -

additionalMetadata:允许您向所有创建的Document对象添加自定义元数据。

示例文档:code.md

This is a Java sample application:

```java

package com.example.demo;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class DemoApplication {

public static void main(String[] args) {

SpringApplication.run(DemoApplication.class, args);

}

}

```

Markdown also provides the possibility to `use inline code formatting throughout` the entire sentence.

---

Another possibility is to set block code without specific highlighting:

```

./mvnw spring-javaformat:apply

```行为:MarkdownDocumentReader 根据配置处理 Markdown 内容并创建 Document 对象:

-

标题成为 Document 对象中的元数据。

-

段落成为 Document 对象的内容。

-

代码块可以分离到自己的 Document 对象中,也可以与周围文本一起包含。

-

引用块可以分离到自己的 Document 对象中,也可以与周围文本一起包含。

-

水平线可用于将内容拆分为单独的 Document 对象。

读取器保留 Document 对象内容中的内联代码、列表和文本样式等格式。

PDF 页面

PagePdfDocumentReader 使用 Apache PdfBox 库解析 PDF 文档

使用 Maven 或 Gradle 将依赖项添加到您的项目。

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-pdf-document-reader</artifactId>

</dependency>或添加到您的 Gradle build.gradle 构建文件。

dependencies {

implementation 'org.springframework.ai:spring-ai-pdf-document-reader'

}示例

@Component

public class MyPagePdfDocumentReader {

List<Document> getDocsFromPdf() {

PagePdfDocumentReader pdfReader = new PagePdfDocumentReader("classpath:/sample1.pdf",

PdfDocumentReaderConfig.builder()

.withPageTopMargin(0)

.withPageExtractedTextFormatter(ExtractedTextFormatter.builder()

.withNumberOfTopTextLinesToDelete(0)

.build())

.withPagesPerDocument(1)

.build());

return pdfReader.read();

}

}PDF 段落

ParagraphPdfDocumentReader 使用 PDF 目录(例如 TOC)信息将输入 PDF 拆分为文本段落,并为每个段落输出一个 Document。

注意:并非所有 PDF 文档都包含 PDF 目录。

依赖项

使用 Maven 或 Gradle 将依赖项添加到您的项目。

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-pdf-document-reader</artifactId>

</dependency>或添加到您的 Gradle build.gradle 构建文件。

dependencies {

implementation 'org.springframework.ai:spring-ai-pdf-document-reader'

}示例

@Component

public class MyPagePdfDocumentReader {

List<Document> getDocsFromPdfWithCatalog() {

ParagraphPdfDocumentReader pdfReader = new ParagraphPdfDocumentReader("classpath:/sample1.pdf",

PdfDocumentReaderConfig.builder()

.withPageTopMargin(0)

.withPageExtractedTextFormatter(ExtractedTextFormatter.builder()

.withNumberOfTopTextLinesToDelete(0)

.build())

.withPagesPerDocument(1)

.build());

return pdfReader.read();

}

}Tika (DOCX, PPTX, HTML…)

TikaDocumentReader 使用 Apache Tika 从各种文档格式(如 PDF、DOC/DOCX、PPT/PPTX 和 HTML)中提取文本。有关支持格式的完整列表,请参阅 Tika 文档。

依赖项

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-tika-document-reader</artifactId>

</dependency>或添加到您的 Gradle build.gradle 构建文件。

dependencies {

implementation 'org.springframework.ai:spring-ai-tika-document-reader'

}示例

@Component

class MyTikaDocumentReader {

private final Resource resource;

MyTikaDocumentReader(@Value("classpath:/word-sample.docx")

Resource resource) {

this.resource = resource;

}

List<Document> loadText() {

TikaDocumentReader tikaDocumentReader = new TikaDocumentReader(this.resource);

return tikaDocumentReader.read();

}

}转换器

令牌文本拆分器

TokenTextSplitter 是 TextSplitter 的一个实现,它使用 CL100K_BASE 编码根据令牌计数将文本拆分为块。

用法

@Component

class MyTokenTextSplitter {

public List<Document> splitDocuments(List<Document> documents) {

TokenTextSplitter splitter = new TokenTextSplitter();

return splitter.apply(documents);

}

public List<Document> splitCustomized(List<Document> documents) {

TokenTextSplitter splitter = new TokenTextSplitter(1000, 400, 10, 5000, true);

return splitter.apply(documents);

}

}构造函数选项

TokenTextSplitter 提供两个构造函数选项:

-

TokenTextSplitter():使用默认设置创建拆分器。 -

TokenTextSplitter(int defaultChunkSize, int minChunkSizeChars, int minChunkLengthToEmbed, int maxNumChunks, boolean keepSeparator)

参数

-

defaultChunkSize:每个文本块的目标大小(以令牌为单位)(默认值:800)。 -

minChunkSizeChars:每个文本块的最小大小(以字符为单位)(默认值:350)。 -

minChunkLengthToEmbed:要包含的块的最小长度(默认值:5)。 -

maxNumChunks:从文本生成的最大块数(默认值:10000)。 -

keepSeparator:是否在块中保留分隔符(如换行符)(默认值:true)。

行为

TokenTextSplitter 按如下方式处理文本内容:

-

它使用 CL100K_BASE 编码将输入文本编码为令牌。

-

它根据

defaultChunkSize将编码文本拆分为块。 -

对于每个块:[style="loweralpha"][style="loweralpha"][style="loweralpha"]

-

它将块解码回文本。

-

它尝试在

minChunkSizeChars之后找到一个合适的断点(句号、问号、感叹号或换行符)。 -

如果找到断点,则在该点截断块。

-

它根据

keepSeparator设置修剪块并可选地删除换行符。 -

如果结果块的长度大于

minChunkLengthToEmbed,则将其添加到输出中。

-

-

此过程继续进行,直到所有令牌都已处理或达到

maxNumChunks。 -

任何剩余的文本如果长度大于

minChunkLengthToEmbed,则作为最终块添加。

示例

Document doc1 = new Document("This is a long piece of text that needs to be split into smaller chunks for processing.",

Map.of("source", "example.txt"));

Document doc2 = new Document("Another document with content that will be split based on token count.",

Map.of("source", "example2.txt"));

TokenTextSplitter splitter = new TokenTextSplitter();

List<Document> splitDocuments = this.splitter.apply(List.of(this.doc1, this.doc2));

for (Document doc : splitDocuments) {

System.out.println("Chunk: " + doc.getContent());

System.out.println("Metadata: " + doc.getMetadata());

}关键字元数据增强器

KeywordMetadataEnricher 是一个 DocumentTransformer,它使用生成式 AI 模型从文档内容中提取关键字并将其添加为元数据。

用法

@Component

class MyKeywordEnricher {

private final ChatModel chatModel;

MyKeywordEnricher(ChatModel chatModel) {

this.chatModel = chatModel;

}

List<Document> enrichDocuments(List<Document> documents) {

KeywordMetadataEnricher enricher = KeywordMetadataEnricher.builder(chatModel)

.keywordCount(5)

.build();

// 或者使用自定义模板

KeywordMetadataEnricher enricher = KeywordMetadataEnricher.builder(chatModel)

.keywordsTemplate(YOUR_CUSTOM_TEMPLATE)

.build();

return enricher.apply(documents);

}

}构造函数选项

KeywordMetadataEnricher 提供两个构造函数选项:

-

KeywordMetadataEnricher(ChatModel chatModel, int keywordCount):使用默认模板并提取指定数量的关键字。 -

KeywordMetadataEnricher(ChatModel chatModel, PromptTemplate keywordsTemplate):使用自定义模板进行关键字提取。

行为

KeywordMetadataEnricher 按如下方式处理文档:

-

对于每个输入文档,它使用文档内容创建一个提示。

-

它将此提示发送到提供的

ChatModel以生成关键字。 -

生成的关键字将添加到文档的元数据中,键为 "excerpt_keywords"。

-

返回增强后的文档。

自定义

您可以使用默认模板,也可以通过 keywordsTemplate 参数自定义模板。

默认模板是:

\{context_str}. Give %s unique keywords for this document. Format as comma separated. Keywords:其中 {context_str} 被文档内容替换,%s 被指定的关键字计数替换。

示例

ChatModel chatModel = // 初始化您的聊天模型

KeywordMetadataEnricher enricher = KeywordMetadataEnricher.builder(chatModel)

.keywordCount(5)

.build();

// 或者使用自定义模板

KeywordMetadataEnricher enricher = KeywordMetadataEnricher.builder(chatModel)

.keywordsTemplate(new PromptTemplate("Extract 5 important keywords from the following text and separate them with commas:\n{context_str}"))

.build();

Document doc = new Document("This is a document about artificial intelligence and its applications in modern technology.");

List<Document> enrichedDocs = enricher.apply(List.of(this.doc));

Document enrichedDoc = this.enrichedDocs.get(0);

String keywords = (String) this.enrichedDoc.getMetadata().get("excerpt_keywords");

System.out.println("Extracted keywords: " + keywords);摘要元数据增强器

SummaryMetadataEnricher 是一个 DocumentTransformer,它使用生成式 AI 模型为文档创建摘要并将其添加为元数据。它可以为当前文档以及相邻文档(前一个和下一个)生成摘要。

用法

@Configuration

class EnricherConfig {

@Bean

public SummaryMetadataEnricher summaryMetadata(OpenAiChatModel aiClient) {

return new SummaryMetadataEnricher(aiClient,

List.of(SummaryType.PREVIOUS, SummaryType.CURRENT, SummaryType.NEXT));

}

}

@Component

class MySummaryEnricher {

private final SummaryMetadataEnricher enricher;

MySummaryEnricher(SummaryMetadataEnricher enricher) {

this.enricher = enricher;

}

List<Document> enrichDocuments(List<Document> documents) {

return this.enricher.apply(documents);

}

}构造函数

SummaryMetadataEnricher 提供两个构造函数:

-

SummaryMetadataEnricher(ChatModel chatModel, List<SummaryType> summaryTypes) -

SummaryMetadataEnricher(ChatModel chatModel, List<SummaryType> summaryTypes, String summaryTemplate, MetadataMode metadataMode)

参数

-

chatModel:用于生成摘要的 AI 模型。 -

summaryTypes:SummaryType枚举值的列表,指示要生成哪些摘要(PREVIOUS、CURRENT、NEXT)。 -

summaryTemplate:用于摘要生成的自定义模板(可选)。 -

metadataMode:指定在生成摘要时如何处理文档元数据(可选)。

行为

SummaryMetadataEnricher 按如下方式处理文档:

-

对于每个输入文档,它使用文档内容和指定的摘要模板创建一个提示。

-

它将此提示发送到提供的

ChatModel以生成摘要。 -

根据指定的

summaryTypes,它将以下元数据添加到每个文档中:-

section_summary:当前文档的摘要。 -

prev_section_summary:前一个文档的摘要(如果可用且已请求)。 -

next_section_summary:下一个文档的摘要(如果可用且已请求)。

-

-

返回增强后的文档。

自定义

可以通过提供自定义 summaryTemplate 来自定义摘要生成提示。默认模板是:

"""

Here is the content of the section:

{context_str}

Summarize the key topics and entities of the section.

Summary:

"""示例

ChatModel chatModel = // 初始化您的聊天模型

SummaryMetadataEnricher enricher = new SummaryMetadataEnricher(chatModel,

List.of(SummaryType.PREVIOUS, SummaryType.CURRENT, SummaryType.NEXT));

Document doc1 = new Document("Content of document 1");

Document doc2 = new Document("Content of document 2");

List<Document> enrichedDocs = enricher.apply(List.of(this.doc1, this.doc2));

// 检查增强文档的元数据

for (Document doc : enrichedDocs) {

System.out.println("Current summary: " + doc.getMetadata().get("section_summary"));

System.out.println("Previous summary: " + doc.getMetadata().get("prev_section_summary"));

System.out.println("Next summary: " + doc.getMetadata().get("next_section_summary"));

}提供的示例演示了预期的行为:

-

对于两个文档的列表,两个文档都收到

section_summary。 -

第一个文档收到

next_section_summary,但没有prev_section_summary。 -

第二个文档收到

prev_section_summary,但没有next_section_summary。 -

第一个文档的

section_summary与第二个文档的prev_section_summary匹配。 -

第一个文档的

next_section_summary与第二个文档的section_summary匹配。

写入器

文件

FileDocumentWriter 是 DocumentWriter 的一个实现,它将 Document 对象列表的内容写入文件。

用法

@Component

class MyDocumentWriter {

public void writeDocuments(List<Document> documents) {

FileDocumentWriter writer = new FileDocumentWriter("output.txt", true, MetadataMode.ALL, false);

writer.accept(documents);

}

}构造函数

FileDocumentWriter 提供三个构造函数:

-

FileDocumentWriter(String fileName) -

FileDocumentWriter(String fileName, boolean withDocumentMarkers) -

FileDocumentWriter(String fileName, boolean withDocumentMarkers, MetadataMode metadataMode, boolean append)

参数

-

fileName:要写入文档的文件名。 -

withDocumentMarkers:是否在输出中包含文档标记(默认值:false)。 -

metadataMode:指定要写入文件的文档内容(默认值:MetadataMode.NONE)。 -

append:如果为 true,数据将写入文件末尾而不是开头(默认值:false)。

行为

FileDocumentWriter 按如下方式处理文档:

-

它为指定的文件名打开一个 FileWriter。

-

对于输入列表中的每个文档:[style="loweralpha"][style="loweralpha"][style="loweralpha"]

-

如果

withDocumentMarkers为 true,它会写入一个文档标记,包括文档索引和页码。 -

它根据指定的

metadataMode写入文档的格式化内容。

-

-

所有文档写入后,文件将关闭。

文档标记

当 withDocumentMarkers 设置为 true 时,写入器将为每个文档包含以下格式的标记:

### Doc: [index], pages:[start_page_number,end_page_number]向量存储

提供与各种向量存储的集成。 有关完整列表,请参阅 向量数据库文档。